(Or, Who’s Monitoring the Monitor?)

Everyone uses a monitoring system to understand what’s going on in their own environment and how it performs, but what about the monitoring system itself? The monitoring system also has its own tasks to perform, and obviously its own needs. So NetEye itself, while performing its duties, may be performing well or poorly.

In terms of its monitoring module, NetEye itself can be used to get some useful metrics to understand its own current performance level. Sure, it’s common practice for doctors not to examine themselves, and of course NetEye cannot raise an alert or send a notification when its monitoring module (namely, its Icinga 2 engine) is down.

However, it can gather several performance indicators that provide us with data about its stress level for later analysis. CPU load, memory usage, disk space consumption, number of processes, etc. are some basic indicators that can be easily gathered using the existing services and commands that NetEye provides, and you can collect them about once a minute.

To dig deeper, and to get data more often, you can use Telegraf Agents from InfluxData: NetEye has always been equipped with Telegraf, and since NetEye 4.19 an instance of this very agent has been configured to gather precise metrics about the health of the system running NetEye (either physical or virtual) at the operating system level.

Each NetEye instance, be it a Single-node Master, a multi-node Cluster or a NetEye Satellite, has a Telegraf Agent that makes available a variety of system-wide measurements every 10 seconds. And precisely because they’re gathered by Telegraf, a whole bunch of Grafana Dashboards are available to display them the way you like.

That all sounds pretty good, but the limitation of this approach is that it only covers the system’s performance as a whole. What about what NetEye is doing (or trying to do) while it’s actively monitoring? What about Icinga 2 itself?

Icinga Instance Performance

To get more detailed data about Icinga 2, there’s no other way than to ask Icinga 2 itself. Icinga 2 provides definitions for several commands right out of the box (ITL, Icinga Template Library), and among them you can find some that don’t refer to independent programs, but rather to some embedded routines.

The icinga built-in command is the one doing the actual work. Its short documentation presents it as mainly for the purpose of identifying outdated Icinga 2 instances running in your environment, but there’s a lot more under the hood than one might imagine: there are dozens of metrics that finely describe the status of each Icinga 2 endpoint. This way it’s possible to get a precise idea about what’s going on. Here’s a brief list of the most important (in my opinion) metrics:

- api_num_endpoints, api_num_conn_endpoints, api_num_not_conn_endpoints

- max_execution_time, min_execution_time, avg_execution_time

- max_latency, min_latency, avg_latency

- current_concurrent_checks

- active_host_checks_1min, active_host_checks_5min, active_host_checks_15min

- active_service_checks_1min, active_service_checks_5min, active_service_checks_15min

- passive_host_checks_1min, passive_host_checks_5min, passive_host_checks_15min

- passive_service_checks_1min, passive_service_checks_5min, passive_service_checks_15min

There are more metrics that show different aspects of each Icinga 2 instance’s work, but they’re either too basic (like the number of hosts/services managed by the instance, grouped by their status) or too advanced (like JSON RPC protocol statistics) to be covered in this blog post, so I’ll skip them. Anyway, the ones outlined in the list above are enough to grasp the status of your monitoring infrastructure, especially if you’re in a Distributed Monitoring scenario.
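If you want to work with these metrics outside of NetEye, a first step is turning the check’s performance data into a dictionary. The following is a minimal sketch that assumes the standard monitoring-plugin perfdata layout (`label=value[unit];warn;crit;min;max`, items separated by spaces) and labels without spaces; the sample string is invented for illustration and is not real output.

```python
import re

# Leading numeric part of a perfdata value, e.g. "0.25" in "0.25s" or "1.5e-05".
_NUMBER = re.compile(r"-?\d+(?:\.\d+)?(?:[eE][-+]?\d+)?")


def parse_perfdata(perfdata: str) -> dict[str, float]:
    """Return {label: value} for every numeric item in a perfdata string.

    Assumes labels contain no spaces, which holds for the metrics listed above.
    """
    metrics: dict[str, float] = {}
    for item in perfdata.split():
        label, _, rest = item.partition("=")
        if not rest:
            continue  # not a label=value pair
        match = _NUMBER.match(rest.split(";")[0])
        if match:
            metrics[label] = float(match.group())
    return metrics


# Hypothetical sample; a real check returns many more metrics.
sample = "api_num_endpoints=12 api_num_conn_endpoints=11 avg_latency=0.25s;1;2 max_execution_time=8.5s"
metrics = parse_perfdata(sample)
print(metrics["avg_latency"])
```

Units and thresholds are discarded on purpose: the goal here is only to get plain numbers you can compare or plot.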

Endpoint related metrics

Metrics: api_num_endpoints, api_num_conn_endpoints, api_num_not_conn_endpoints

These three metrics, which expose the number of API endpoints, tell you how big your infrastructure is (in terms of monitoring elements) and how much of it is currently operational. They count endpoints of any kind, be they Icinga 2 instances on NetEye Servers or Icinga 2 Agents. Ideally, api_num_conn_endpoints should equal api_num_endpoints or, if you prefer, api_num_not_conn_endpoints should be 0 (or at least as close to 0 as possible).
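The relationship between the three endpoint counters can be expressed in a few lines. This is only a sketch: the function name and the sample numbers are made up for illustration.

```python
def endpoint_health(total: int, connected: int, not_connected: int) -> tuple[bool, float]:
    """Return (all_connected, connected_ratio) for the three endpoint counters."""
    # With no endpoints at all there is nothing disconnected, so report 100%.
    ratio = connected / total if total else 1.0
    all_connected = connected == total and not_connected == 0
    return all_connected, ratio


# Hypothetical values: 12 known endpoints, one of them currently unreachable.
ok, ratio = endpoint_health(total=12, connected=11, not_connected=1)
print(ok, round(ratio, 3))
```

A ratio that stays below 1.0 for a long time usually deserves a closer look at which Agents or Satellites are unreachable.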

Check execution related metrics

Metrics: max_execution_time, min_execution_time, avg_execution_time, max_latency, min_latency, avg_latency

These six metrics refer to the checks the Icinga 2 instance has executed in its current lifetime. They can be put in 2 groups: the first half refers to the duration of the checks, the remaining half to their latencies.

The concept of execution time is fairly simple: it’s the time required for each check to terminate. Termination can come in the form of a “nice closure” (meaning the process terminates its own work gracefully) or in the form of a timeout (immediately followed by Icinga 2 killing the whole process tree). This family of metrics is useful to understand if we are making “fair” requests (in terms of time consumption) to the Icinga 2 instance. Remember: the longer the checks take, the lower the number of checks executed per unit of time, resulting in delayed monitoring.

Longer checks have higher chances of incurring a timeout and thus having the associated Plugin Process killed, lowering the reliability of your monitoring. Also, longer checks make the process of closing the current Icinga 2 instance take longer, extending the duration of the Deploy time, with all the due implications and issues. Therefore, it’s important to lower execution times as much as possible, because long execution times negatively impact your monitoring in various ways. If you want a number, don’t exceed 60 seconds for max_execution_time; as for avg_execution_time, try to get it under 20 seconds.

The concept of latency is a bit more complex. It’s based on the idea that Icinga 2 works like a scheduler: a check has its execution planned for a precise time, but that execution can in reality take place later. The delay between the planned execution time and the actual execution time is the latency. Latency is almost always higher than 0, so the question is how far from 0 we are: the higher the value, the bigger the issue. High latency generally means the Icinga 2 instance is not able to keep up with the work. There are roughly 2 possible causes:

- Too many checks are expected to be executed, so some are left in the queue waiting for their chance

- All running processes on the server are simply demanding too many resources, so the whole system is not able to keep the pace

These metrics can expose a situation where too many checks are managed by a single endpoint, or where a single endpoint is not sized correctly. In any case, a max_latency higher than one or two seconds is really too much.
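The guideline values mentioned in this section can be turned into a quick sanity check. The sketch below assumes the metrics are already available as a dictionary of seconds; the limits are the rule-of-thumb numbers from the text (with 2 seconds taken as the upper end of “one or two seconds”), not official Icinga 2 thresholds, and the sample values are invented.

```python
# Rule-of-thumb limits in seconds, taken from the text; tune them for your environment.
LIMITS = {
    "max_execution_time": 60.0,
    "avg_execution_time": 20.0,
    "max_latency": 2.0,
}


def find_violations(metrics: dict[str, float]) -> list[str]:
    """Return the sorted names of metrics whose value exceeds its limit.

    Metrics missing from the input are treated as 0 and never reported.
    """
    return sorted(
        name for name, limit in LIMITS.items() if metrics.get(name, 0.0) > limit
    )


# Hypothetical readings from one endpoint.
readings = {"max_execution_time": 75.0, "avg_execution_time": 4.2, "max_latency": 0.8}
violations = find_violations(readings)
print(violations)
```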

Concurrent checks

Metrics: current_concurrent_checks

The number of concurrent checks is another tricky subject. Each Icinga 2 instance can run at most a specific number of checks simultaneously. A check counts toward this number whether it is executed directly by the Icinga 2 instance or by an Agent directly controlled by that same instance.

This metric represents the number of active checks that the current Icinga 2 instance is executing simultaneously. As described by the official documentation in the Global Configuration Constants table, it is capped by the constant MaxConcurrentChecks, which defaults to 512. You can check this number to understand how much work Icinga 2 is requesting of the underlying system, and to get hints about how to tune it.
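To put current_concurrent_checks into perspective, you can relate it to the configured cap. This is a small sketch that uses the default of 512 mentioned above; if you changed MaxConcurrentChecks, pass your own value. The function name and sample reading are illustrative.

```python
DEFAULT_MAX_CONCURRENT_CHECKS = 512  # default value of the MaxConcurrentChecks constant


def concurrency_utilization(current: int, limit: int = DEFAULT_MAX_CONCURRENT_CHECKS) -> float:
    """Return the fraction of the concurrency cap currently in use (0.0 to 1.0)."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return current / limit


# Hypothetical reading: 128 checks running at the same time.
utilization = concurrency_utilization(128)
print(f"{utilization:.0%}")
```

A value that sits close to 100% suggests checks are queuing behind the cap, which tends to show up as latency as well.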

Number of active checks

Metrics: active_host_checks_1min, active_host_checks_5min, active_host_checks_15min, active_service_checks_1min, active_service_checks_5min, active_service_checks_15min

These metrics detail how the monitoring work is being executed and distributed: you can see how many checks have been executed in the last 1, 5 and 15 minutes by the Icinga 2 instance, divided by Object Type (host or service). This is a good indication of how many tasks this instance is carrying out.
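Since the three windows have different lengths, the raw counts are easier to compare once converted to checks per second. The following sketch assumes each counter holds the number of checks executed within its window; the `kind` prefix and the sample numbers are illustrative.

```python
# Window suffix -> window length in seconds.
WINDOWS = {"1min": 60, "5min": 300, "15min": 900}


def checks_per_second(metrics: dict[str, float], kind: str = "active_service_checks") -> dict[str, float]:
    """Convert the 1/5/15 minute counters of one metric family into per-second rates."""
    rates: dict[str, float] = {}
    for suffix, seconds in WINDOWS.items():
        name = f"{kind}_{suffix}"
        if name in metrics:
            rates[suffix] = metrics[name] / seconds
    return rates


# Hypothetical counters: the load over the last 5 minutes is double the 15 minute average.
counters = {
    "active_service_checks_1min": 600,
    "active_service_checks_5min": 3000,
    "active_service_checks_15min": 4500,
}
rates = checks_per_second(counters)
print(rates)
```

Comparing the 1 minute rate with the 15 minute rate gives a rough idea of whether the workload is steady or arriving in bursts.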

Number of passive checks

Metrics: passive_host_checks_1min, passive_host_checks_5min, passive_host_checks_15min, passive_service_checks_1min, passive_service_checks_5min, passive_service_checks_15min

Similar to the number of active checks, these metrics show how many check results have been submitted to the Icinga 2 instance in the last 1, 5 and 15 minutes, again divided by Object Type (host or service). This number has no relation to Active Monitoring: it refers to the number of calls to the Process Check Result command (via Web Interface or REST API) that have been made. This also includes the activities carried out by Tornado, so it’s a good measure of how much your Passive Monitoring is demanding from Icinga 2.
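To make it concrete what gets counted here, this is roughly what one passive check result looks like when submitted through the REST API. The sketch only builds the JSON body and sends nothing; it assumes the body would be POSTed to the `process-check-result` action of the Icinga 2 API, and the host and service names are hypothetical.

```python
import json


def build_check_result(host: str, service: str, exit_status: int, output: str) -> dict:
    """Build the JSON body for one passive service check result."""
    if exit_status not in (0, 1, 2, 3):  # OK, WARNING, CRITICAL, UNKNOWN
        raise ValueError("exit_status must be 0, 1, 2 or 3")
    return {
        "type": "Service",
        "filter": f'host.name=="{host}" && service.name=="{service}"',
        "exit_status": exit_status,
        "plugin_output": output,
    }


# Hypothetical host and service names.
payload = build_check_result("web01", "nightly-backup", 0, "Backup completed")
print(json.dumps(payload, indent=2))
```

Every body like this that Icinga 2 accepts adds one to the passive service counters described above.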

How to Get Icinga 2 Instance Performance

Getting these statistics is pretty easy: just create a Service Template that uses icinga as its Command and set the Run on Agent property to Yes. Then ensure you have a Host Object for every Icinga 2 instance you want to measure: be sure that each Host Object has the Icinga Agent property set to Yes.

Last, create a Service Object using the Service Template created before on each Host Object. If you’re using the Self-monitoring module that Wuerth-Phoenix Consultants set up on every NetEye deployment, this check is already in place.

How to Consult Icinga 2 Instance Performance

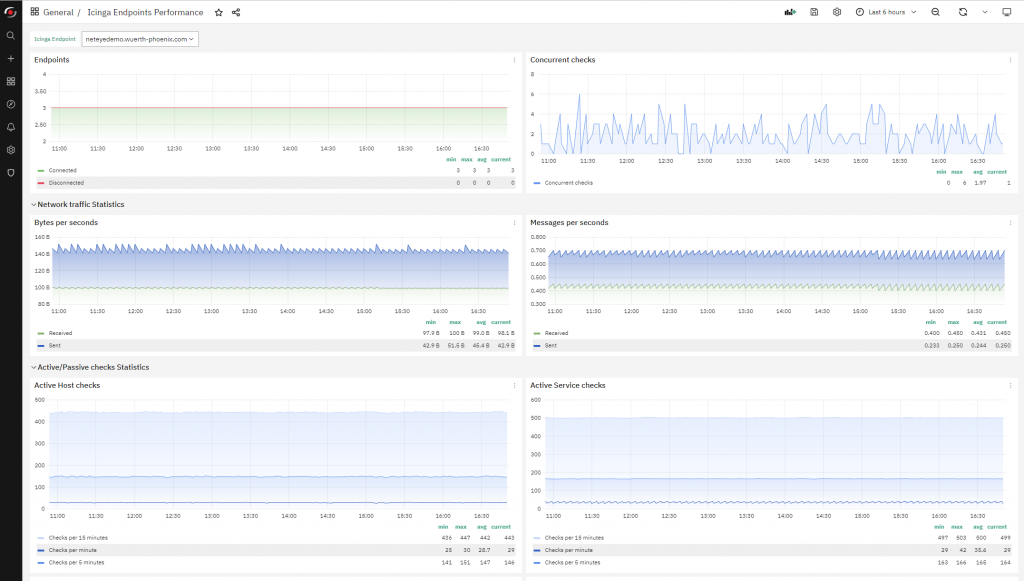

This data can be consulted quite nicely using the ITOA module. If you like, you can create your own Grafana Dashboard for displaying these statistics. Or if you prefer, our NetEye Demo environment provides a dashboard named Icinga Endpoints Performance that organizes and displays this data. Just log in to the Demo Environment, open this ITOA Dashboard and export it to install it in your own environment. Just remember: this Dashboard requires a MySQL Datasource that points to the Icinga 2 IDO Database.