Today I want to talk about a topic that, although it is always in the spotlight (as it should be), has become even more prominent in recent months: how to handle Secrets and Personal Data with NetEye. And I’m not talking about the right way to use Director’s Data Fields, how to hide variables in the Monitoring Module, or how to limit access to objects with Icingaweb2 Roles: that’s child’s play. I’m talking about something subtler and completely different: how Icinga 2 manages secrets and how it distributes them within its infrastructure.

I want to point out the most basic mistakes people make while managing their Icinga 2 configuration, and of course I will try to propose some kind of solution. To better explain the root of the issue, I also need to give you the necessary background on the Icinga 2 infrastructure, its configuration, and the logic behind configuration deployment.

As you can guess, this will be a long and complex topic. It is primarily a design problem, so everyone needs to understand it thoroughly despite its length. In short, take your time to read it.

Defining the Scenario: Multiple Teams and Data Separation

Before talking about Secret Management, we must have a well-defined scenario in mind. While security is a concern for everyone, large or small, imagining a large company will make this scenario easier to understand.

Out of all the teams in a public company, let’s choose these two:

- Research and Development Team (Team A); as its name says, it performs research and development

- Human Resources Team (Team B); it manages the files of every employee in the company, including personal data and salaries; in short, all the data that must be kept secret from all our (untrustworthy and envious) colleagues

Each Team has its own portal to access and manage the data they need; let’s call them the Projects Portal and the Employees Portal. For monitoring purposes, each Portal has its own monitoring credential(s) that allow read-only access to the environment. Let’s also assume both Teams are very large and so, to enable quicker response times for IT issues and daily management, each of them has its own IT Administrator who can take care of their own Team’s servers.

In this scenario, the data of each Team must not be accessible by other Teams because it can lead to several types of illegal situations, from violation of privacy to industrial espionage. Therefore, securing data access is a priority.

The Monitoring Scenario

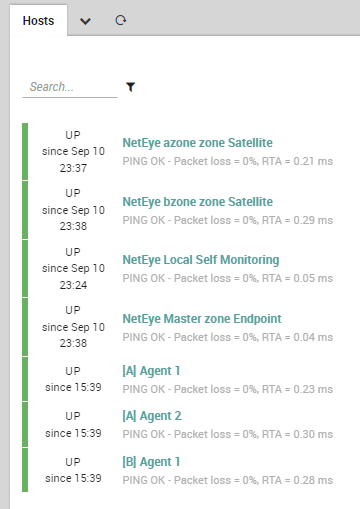

The most obvious and easy way to monitor these systems using NetEye is the following:

- Deploy a NetEye Master server and use it to centralize the management of monitoring

- Add a NetEye Satellite to monitor servers from Team A; it manages Zone azone

- Add a NetEye Satellite to monitor servers from Team B; it manages Zone bzone

The entire group of servers serving Team A and Team B’s requests is represented here by 3 simple Host Objects monitored via Icinga 2 agents: two for Team A and one for Team B. Therefore, we will handle each of them as we would any set of servers serving the same purpose. Of course, this is just one example, but let’s keep it simple for now.

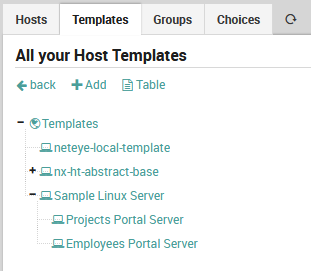

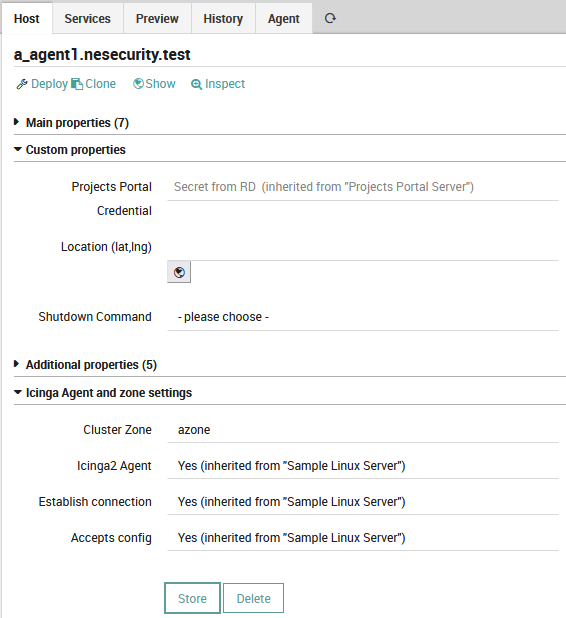

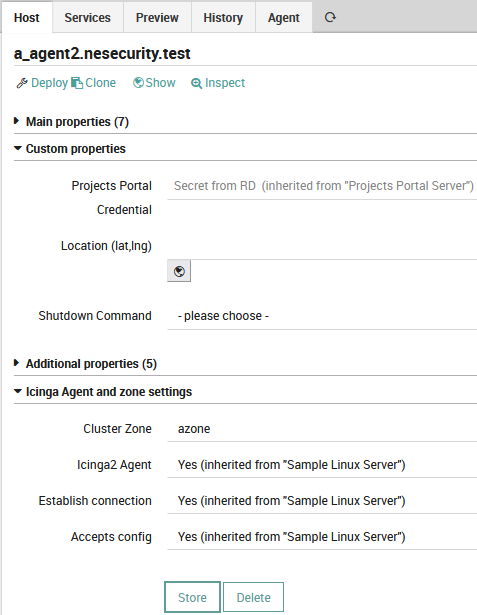

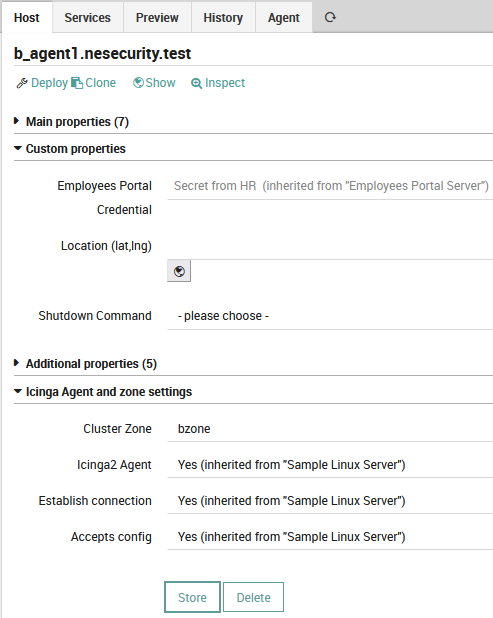

We assume all the servers are based on CentOS 7, but everything I’ve written here is valid for all flavors of Linux and Windows: all OSes are equally affected by what will happen. As a logical consequence, our Host Template structure will have a root HT named Sample Linux Server, from which two new HTs are derived: Projects Portal Server and Employees Portal Server. Each of them has its own Data Field that allows us to fill in the required monitoring credentials.

Since we are managing a set of servers, the most obvious way to do this is to write the monitoring credentials directly into each HT: this way all HOs will automatically inherit the right credentials. And now, the finishing touch: The Cluster Zone is manually set on each Host Object.

If you’re wondering why I chose to set the Cluster Zone manually on each HO, please understand that this is not an uncommon approach: people prefer to update their HTs when they are trying to manage a large group of HOs, but when they make slight corrections, they tend to adjust properties directly on the HO.
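Rendered as Icinga 2 configuration, the Host Objects of this scenario might look roughly like the sketch below. The host names and addresses are hypothetical (the scenario does not name them); only the template names come from the setup described above.

```icinga2
// Hypothetical Host Objects for the scenario; names and addresses are
// illustrative assumptions, not taken from a real deployment.

// Cluster Zone set to azone (for example, placed under zones.d/azone/)
object Host "projects-portal-01.example.com" {
    import "Projects Portal Server"
    address = "192.0.2.11"
}

object Host "projects-portal-02.example.com" {
    import "Projects Portal Server"
    address = "192.0.2.12"
}

// Cluster Zone set to bzone (for example, placed under zones.d/bzone/)
object Host "employees-portal-01.example.com" {
    import "Employees Portal Server"
    address = "192.0.2.21"
}
```

Note that none of these Host Objects carries a credential itself: each one relies entirely on the Host Template it imports.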

Pointing out the Issue

Now that the layout of the monitoring part is explained, we can get straight to the issue at hand. I don’t want to hack anything here, I simply want to examine some files, so don’t get your expectations too high.

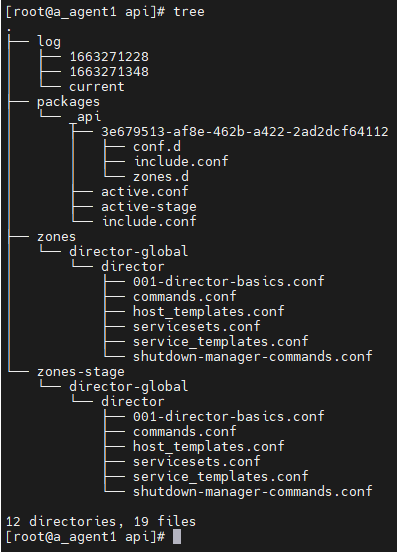

Because each instance of Icinga 2 dynamically receives instructions on what to do when Director deploys the Configuration, it’s clear that a part of that very Configuration is sent to each Icinga 2 Instance. Each Icinga 2 instance receives that Configuration and stores it in a directory known as DataDir (ref.: Directory Path Constants – Language Reference – Icinga 2). DataDir is usually /var/lib/icinga2, but NetEye uses a different value. Now, let’s log in to a Server to check the contents of its DataDir, say a Server from Team A. Inside its DataDir there is a subdirectory named api, which contains the following files:

Now, the contents of this subdirectory vary based on the content of the Configuration the instance receives, but the big picture doesn’t change: zones-stage is a staging directory where a new Configuration is stored until Icinga 2 loads it, while the running Configuration is inside the zones directory. One file immediately draws my attention: host_templates.conf. Here’s a short extract from that file:

    ...

    template Host "Sample Linux Server" {
        check_command = "hostalive"
        max_check_attempts = "2"
        check_interval = 3m
        retry_interval = 1m
        enable_notifications = true
        enable_active_checks = true
        enable_passive_checks = true
        enable_event_handler = true
        enable_flapping = true
        enable_perfdata = true
        volatile = false
    }

    template Host "Projects Portal Server" {
        import "Sample Linux Server"

        vars.projects_portal_credential = "Secret from RD"
    }

    template Host "Employees Portal Server" {
        import "Sample Linux Server"

        vars.employees_portal_credential = "Secret from HR"
    }

    ...

As you can imagine, the Configuration sent to the HO has to contain the definitions of everything that’s required for that same HO to be monitored. Therefore, one might expect the credentials for the Projects Portal to be inside this chunk of data. What’s not expected is the additional presence of the credentials for the Employees Portal.

Before you start running amok screaming and crying, I want to tell you this: DataDir is highly secured and anyone without super-administrative rights (root on Linux and Administrator rights on Windows) won’t be able to access it. But if anyone does get these rights, it’s all over. Hackers aside, these kinds of rights are usually assigned to the most trusted people available, or those who already have administrative access to other sensitive applications, greatly reducing the need to exploit Icinga to commit some kind of fraud.

But let’s imagine this scenario: someone from a Software House is given the task of deploying a new application on a server belonging to the R&D Team. To do so, they need local administrator rights on that server. If this server is monitored as described above, this person can acquire an unexpected quantity of personal/sensitive data in a perfectly legitimate way. And this must be avoided at all costs.

Icinga 2, Zones and Endpoints

The way Icinga 2 manages and distributes its Configuration is closely related to how the Monitoring Architecture is designed: understanding the concepts of Distributed Monitoring (or just the building blocks) is key to understanding this behavior and finding a way to limit the unexpected as much as possible. Although reading everything in the official Icinga 2 Documentation is very useful, I’ll provide you with the short version.

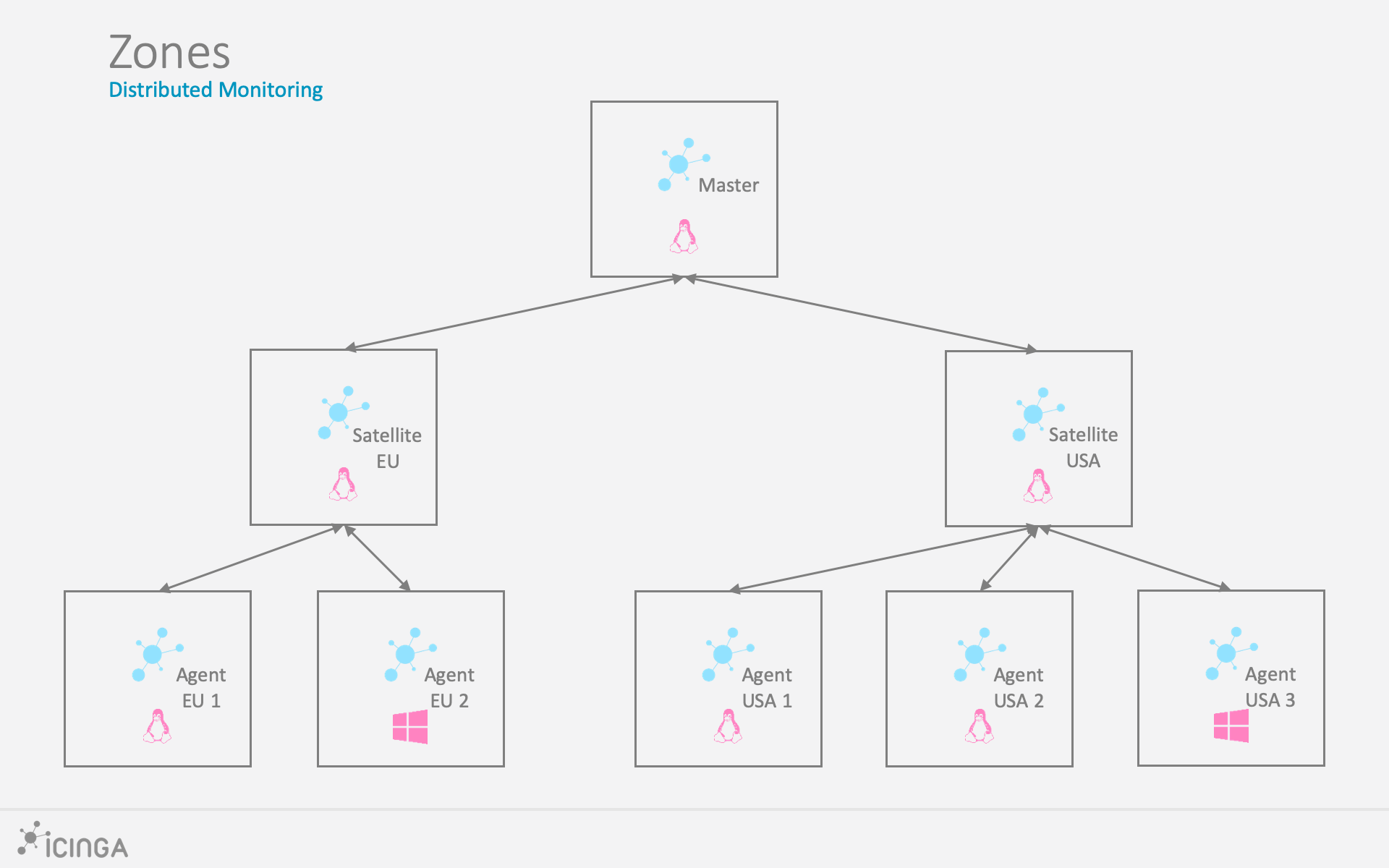

A Distributed Monitoring Infrastructure is composed of logical blocks named Zones. The Icinga 2 Documentation defines Zones as objects “used to specify which Icinga 2 instances are located in a zone”. I prefer a more abstract description: a Zone is a container in which objects to be monitored can be placed together with their requirements. Objects to be monitored are HOs and SOs, and their requirements are everything that completes their definitions and supports their monitoring: Templates, Commands, Apply Rules, Dependencies, etc. Each Zone can be managed by one or two Endpoints, where an Endpoint is, in its most practical form, an Icinga 2 process, be it the Master, a Satellite or an Agent. Each Zone can have a Parent Zone, enabling a hierarchy whose root is the Zone managed by the Icinga 2 Master Instance. Note that even though we can call a Zone with a Parent Zone a Child Zone, Icinga 2 has no such concept: a Zone can define its Parent but not its Children, which follow implicitly from the Parent definitions.

We can then identify roughly three types of Zones, based on the role the managing Endpoint(s) has:

- Master Zone, the zone where the Icinga 2 Master Instance runs, by default known as master

- Satellite Zone, a zone managed by an Icinga 2 Instance that is not an Agent but is also not the Master

- Agent Zone, a special zone that is always managed by an Icinga 2 Agent
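As a minimal sketch, the three Zone types listed above could be declared with Zone and Endpoint objects like these. All endpoint names are hypothetical; only the Zone names master and azone come from the scenario.

```icinga2
// Master Zone: the root of the hierarchy, so it has no parent.
// Endpoint names below are illustrative assumptions.
object Endpoint "neteye-master.example.com" { }

object Zone "master" {
    endpoints = [ "neteye-master.example.com" ]
}

// Satellite Zone: managed by a Satellite, child of the Master Zone.
object Endpoint "satellite-a.example.com" { }

object Zone "azone" {
    endpoints = [ "satellite-a.example.com" ]
    parent = "master"
}

// Agent Zone: managed by a single Agent, child of a Satellite Zone.
// By convention the Zone is named after the Agent's Endpoint.
object Endpoint "projects-portal-01.example.com" { }

object Zone "projects-portal-01.example.com" {
    endpoints = [ "projects-portal-01.example.com" ]
    parent = "azone"
}
```

Each Zone declares only its parent. There is no attribute for listing children, which matches the point made earlier that Child Zones exist only by implication.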

Even if NetEye has no official support for it, it is possible to build hierarchies with different depths on each branch, enabling two or more levels of Satellites. But this only defines whether and which Zones an Endpoint can contact: a Zone is pretty much like a “waypoint” that a chunk of Configuration must walk in order to reach its designated Icinga 2 Instance.

To be more precise, each Zone only knows about its own Objects and the Agent Zones that it manages directly: there is no inheritance in Configuration distribution. The only exception is the Master Zone: it contains the whole Configuration and is responsible for dispatching the appropriate Configuration chunk to the right Zone. Therefore, it is safe to assume the Master Zone knows everything, and this fact cannot be changed.

This poses a question: if the Configuration is not distributed using inheritance, how can an Agent get details about the Templates it needs? There is a fourth flavor of Zone, called the Global Zone. Global Zones are zones synced on all Endpoints across the entire Monitoring Infrastructure. The most famous one, that is, the only one with a different behavior than the others, is director-global: it contains by default everything that is not an HO or SO and is not explicitly assigned to any other Zone. This is the way Icinga 2 and Director overcome the strict boundary of a Zone, allowing for easier management of Templates. If Zone director-global didn’t exist, all Templates would have to be manually redefined on all Zones, making Distributed Monitoring a real mess.

So just in case you haven’t yet clearly understood it, here’s a brief summary about Global Zones and director-global:

- Global Zones are distributed to all available Endpoints, regardless of their role

- Objects can be assigned to Global Zones the same way they are assigned to other Zones: using the property Cluster Zone, available on each Object

- Objects that are not assigned to any Zone will be implicitly assigned:

  - To Zone master if they are an HO or SO

  - To Zone director-global in all other cases
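To make the summary above concrete, here is a minimal sketch of how a Global Zone is declared and of what ends up inside it in our scenario. The template is the one from the extract shown earlier; the comments describe the implicit assignment and are not literal Director output.

```icinga2
// A Global Zone has neither endpoints nor a parent: it is simply
// flagged as global, and its content is synced to every Endpoint.
object Zone "director-global" {
    global = true
}

// This template has no Cluster Zone set, so it is implicitly assigned
// to director-global and therefore reaches every Master, Satellite
// and Agent, including the credential it carries.
template Host "Employees Portal Server" {
    import "Sample Linux Server"

    vars.employees_portal_credential = "Secret from HR"
}
```

This is exactly why a server from Team A ends up holding the credential that belongs to Team B.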

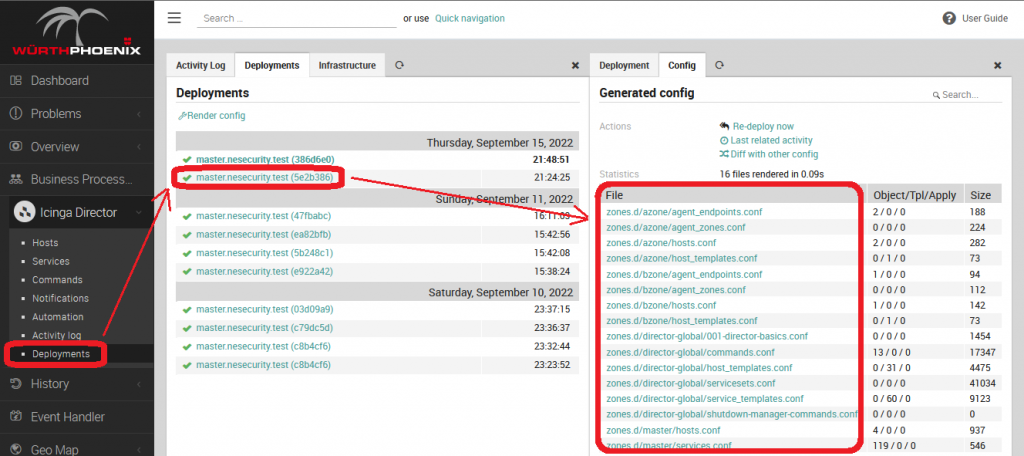

In case you want to take a look at the Configuration files to confirm this, you don’t need to search the Data Dir of each Endpoint: you can peek inside Configuration Files directly with Director. Just open the details of a deployment from the Web Interface and move to the Config Tab.

How to Limit Disclosure of Secrets

Given all these concepts, it’s possible to look at the issue in a different way to better understand what’s going on. It’s a combination of several factors:

- Zones are directly assigned to Host Objects

- Secrets of Host Objects are inherited from Host Templates

- Host Templates have no Zone, implicitly meaning they will be assigned to director-global

The result is: secrets are written into a Global Zone, then sent to all available Endpoints.

Addressing this issue is not so simple. As always with Icinga 2, this is pretty much a design error, and it must be addressed by a NetEye Architect using a well-made design. Everyone will have their own way to solve this issue, so I will only point out some guidelines for you.

- Templates that are not assigned to any Zone (or are assigned to a Global Zone) must not contain either Personal data or Secrets; you can associate the required Data Fields, but do not fill in any Custom Variables

- The best place to assign Secret or Personal data is on an HO or an SO: this way, sensitive data is available only where strictly needed (for the Agent Endpoint and its Parent Endpoints, that is the bare minimum)

- If the previous point is not applicable, make sure Templates containing Secrets or Personal Data are explicitly assigned to a Zone that is not a Global one
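Applied to our scenario, the guidelines above could look like the following sketch. Host names are hypothetical, and the comments indicate the Cluster Zone each object would be assigned to; treat this as one possible layout under those assumptions, not as the only correct one.

```icinga2
// Option 1: the secret is set on the Host Object itself.

// Cluster Zone: none (implicitly director-global), so no secret values here.
template Host "Projects Portal Server" {
    import "Sample Linux Server"
}

// Cluster Zone: azone. The credential stays within Team A's Zone.
object Host "projects-portal-01.example.com" {
    import "Projects Portal Server"

    vars.projects_portal_credential = "Secret from RD"
}

// Option 2: the secret stays in a Template, but the Template is
// explicitly assigned to a non-Global Zone.

// Cluster Zone: bzone (explicitly set, not a Global Zone).
template Host "Employees Portal Server" {
    import "Sample Linux Server"

    vars.employees_portal_credential = "Secret from HR"
}

// Cluster Zone: bzone, the same Zone as the Template it imports.
object Host "employees-portal-01.example.com" {
    import "Employees Portal Server"
}
```

In both options the root template Sample Linux Server can remain in director-global, because it contains no sensitive values.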

These guidelines, which can be summarized by the phrase “Sensitive Data must be enclosed in a Non-Global Zone”, can be very difficult to follow. It’s all about the size of each Zone: big Zones mean data is shared between many systems, while small Zones mean an increase in management effort. Creating Zones with the right size is difficult, and the difficulty of this task increases with both the size of your Monitoring Infrastructure and the demands from the Security Department, so there is no specific rule.

Maybe the most obvious way to manage this is to match the perimeter of each Security Domain (whatever it is, as defined by your Security Department) with the perimeter of a single Icinga Zone; otherwise, sensitive data will be shared between different Security Domains. If this looks like a very deep labyrinth to you (or Hell on Earth, if you prefer), the only alternative strategy that can guarantee a 100% success rate is to assign all sensitive data directly to an HO. But this can be done only if all of them are managed using Director Automations, implying that you must have a really complete and well-maintained Asset Management system.

Or you can do it manually, of course.
