Let’s assume we have the following reference scenario, which we’ll use just for simplicity: A fictitious commercial freight business moves large volumes of goods all over Europe. It has two legally registered offices, one in the Netherlands (Western Europe) and the other in Ireland (Northern Europe), where the accounting departments are located.

The business’ board of directors decides to shift most of the IT infrastructure to the cloud, since running on-premise IT infrastructure isn’t seen as the main focus of the business, and there’s a desire to limit capital expenditure. The leadership decides that IT support must be contracted out to a trusted implementation partner on a given budget, subject to annual review.

Requirements

The implementation partner is tasked with the following requirements:

The accounting software

The software is a custom application that the business has developed over the years. It’s maintained by a development team internal to the firm, and must be run on either Linux or Windows OS. The business is unwilling to shift the application to a PaaS service, at least for the foreseeable future, as it would require considerable effort and cost. Moreover, the business risk attached to a redesign is deemed excessive because of the legal and tax obligations.

- It must run on cloud infrastructure and be available and accessible from anywhere 24/7, 365 days a year, except during planned maintenance windows

- It must be accessible only by vetted individuals onboarded to the organization by the HR department after careful screening

- It processes large amounts of digitized legal documents such as shipping documents, freight contracts and other business-related and tax-related unstructured data

The data

- Digital assets can be uploaded only from authorized sources

- Digital assets must be stored safely and permanently and be subject to backup

- Documents must be immutable for several years

- It must be guaranteed that data cannot be overwritten, deleted, lost, tampered with, or encrypted by ransomware

- The data must not be exposed on the public Internet

- The data must only be accessible by the accounting software, and with the least possible latency

- The data must be protected from regional outages, and the accounting software must remain operative from at least one of the two headquarters, even during regional outages

The budget

The overriding directive is that the implementation must keep costs to a minimum while achieving all the requirements above.

The Implementation Proposal

There are several ways to implement a solution to fulfill these requirements. The following primarily focuses on simplicity and the overriding directive to minimize costs. In the real world, the implementation partner would need to devise a few viable implementations and present a comparative analysis to their client, highlighting the pros and cons of each so that the business and the implementation partner can agree that one of the proposed implementations is acceptable to the company.

The design phase is often followed by a POC implementation; therefore, those implementations that lend themselves to being easily prototyped are more likely to be favored.

Secure Data Storage

An Azure Storage Account can be used to meet the data requirements above, for the following reasons:

- It can hold unstructured data, i.e. digitized documents as binary objects (blobs)

- It can also hold semi-structured data such as files or tabular data

- It features WORM (Write Once Read Many) immutable storage with time-based retention policies and legal hold policies compliant with SEC 17a-4(f), CFTC 1.31(d), FINRA, and other regulations

- Uploads can be restricted to authorized digital sources in various ways: in the simplest form, by issuing limited-access SAS (shared access signature) tokens, or with more advanced setups that will be described in a later example

- It provides built-in BCDR (Business Continuity and Disaster Recovery) features such as blob backups and data redundancy for blobs and files across paired regions

- It provides automatic object replication to a paired region, which can be used not only as a BCDR implementation but also to reduce latency across regional access, especially in read-only scenarios such as this case

- Access to the storage account from the public Internet can either be eliminated or restricted only to specific networks

- It is a cost-effective high-volume data container with built-in features for inexpensive long-term data retention via its four access tiers – Hot, Cool, Cold and Archive, with minimal management overhead, thanks to lifecycle management policies
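As a rough illustration of the lifecycle management point above, the sketch below builds a policy document in the JSON shape used by Azure Storage lifecycle management rules. The rule name, the `documents/` prefix and the day thresholds are assumptions made for illustration, not values taken from the scenario; they would need to be tuned to the real access patterns and retention obligations.

```python
import json


def build_lifecycle_policy(prefix: str, cool_after: int, cold_after: int, archive_after: int) -> dict:
    """Return a lifecycle policy that moves block blobs to cheaper tiers as they age."""
    if not (0 <= cool_after < cold_after < archive_after):
        raise ValueError("thresholds must increase: cool < cold < archive")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "tier-down-accounting-documents",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    # Only block blobs under the given prefix are affected.
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": cool_after},
                            "tierToCold": {"daysAfterModificationGreaterThan": cold_after},
                            "tierToArchive": {"daysAfterModificationGreaterThan": archive_after},
                        }
                    },
                },
            }
        ]
    }


# Assumed thresholds: Cool after 30 days, Cold after 90, Archive after 365.
policy = build_lifecycle_policy("documents/", 30, 90, 365)
print(json.dumps(policy, indent=2))
```

The printed JSON could then be applied to the storage account through whichever deployment tooling the implementation partner prefers. Before relying on it, it's worth checking that every tier in the policy is supported for the redundancy option chosen for the account.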

Secure Software Access

The requirements in the section “The accounting software” above can be summarized as follows for the purpose of this simple POC implementation:

- HR must control the identity to which access is granted

- Only the tenant administrators can grant access to these vetted identities

- The software must run on Virtual Machines deployed in the cloud infrastructure

- There is no mandate to explore refactoring options to run the software as a PaaS service

The strongest of these requirements concerns identity: the software must be reachable over the Internet, but only by a selected pool of controlled identities. Although exposing the software over the Internet is a weak point in security practice, it is not uncommon for organizations at the beginning of their cloud journey to temporarily settle for sub-optimal solutions as long as they are feasible, fit-for-purpose and within an agreed budget.

In this case, an Azure Virtual Machine with an SKU determined later, based on the actual workload, may be exposed over the Internet with a public IP, with access protection implemented at the tenant level. This can be done thanks to features provided by Microsoft Entra ID, the identity provider, and its integrations with the Azure Virtual Machine offering.

- Sign in to a Windows virtual machine in Azure by using Microsoft Entra ID, including passwordless

- Sign in to a Linux virtual machine in Azure by using Microsoft Entra ID and OpenSSH

It’s possible to deploy the Virtual Machine with an agent extension so that RBAC (Role-Based Access Control) administrators can govern who is allowed to log on to each VM (via RDP for Windows or SSH for Linux) using identities available in the Microsoft Entra tenant.

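Two built-in RBAC roles apply to this scenario, according to the functional role a user should have on the VM:

- Virtual Machine Administrator Login: the user can log on to the VM with administrator privileges

- Virtual Machine User Login: the user can log on to the VM as a regular user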
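A role assignment of this kind could be scripted roughly as follows. The sketch only assembles an Azure CLI `az role assignment create` command and doesn't run it. It assumes the two built-in roles that Azure provides for Microsoft Entra login to VMs, listed in the `VM_LOGIN_ROLES` mapping. The user name, resource group and VM name are hypothetical placeholders.

```python
import shlex

# Built-in Azure roles for Microsoft Entra login to a VM, keyed by the
# functional role the user should have on the machine.
VM_LOGIN_ROLES = {
    "admin": "Virtual Machine Administrator Login",
    "user": "Virtual Machine User Login",
}


def role_assignment_command(assignee: str, functional_role: str, vm_resource_id: str) -> list:
    """Build the CLI arguments that grant one identity a login role on one VM."""
    try:
        role = VM_LOGIN_ROLES[functional_role]
    except KeyError:
        raise ValueError(f"unknown functional role: {functional_role!r}") from None
    return [
        "az", "role", "assignment", "create",
        "--assignee", assignee,
        "--role", role,
        "--scope", vm_resource_id,  # scoping to a single VM keeps the grant narrow
    ]


# Hypothetical identifiers, for illustration only.
vm_id = (
    "/subscriptions/<subscription-id>/resourceGroups/rg-accounting"
    "/providers/Microsoft.Compute/virtualMachines/vm-accounting-01"
)
cmd = role_assignment_command("accountant@example.com", "user", vm_id)
print(shlex.join(cmd))
```

Scoping each assignment to an individual VM rather than to a resource group or subscription is one way to stay close to least privilege. Whether that granularity is worth the extra administration is a judgment call for the tenant administrators.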

This design can be improved in several ways. It’s worthwhile to give a few examples, split into categories according to their impact on the cost of the solution:

Improvements that do not produce additional costs:

- The Network Security Group (NSG) may be designed to restrict the traffic allowed to and from the VM so that access to and operation of the custom accounting software are maintained without unnecessary openings for attacks. This can be implemented at virtually no additional cost and can be very effective in reducing the attack surface.

Furthermore, the protection provided by controlling the network traffic at the NSG (layer 4) could be increased if the company were willing to alter one of the requirements. Specifically, rather than requiring access to the VMs from any IP address, the allowed IP address range could be restricted to IPs owned by the business, so that connection attempts from anywhere else on the public Internet are rejected.

The only way to attack these VMs would be from a compromised agent located within the owned IP range, and this would have to happen in conjunction with a compromised identity, which is an unlikely scenario. It would, in fact, require a high level of engineering on the part of the attackers, which would not likely match the value of the targets.
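The effect of restricting the source range can be sketched with a simplified model of NSG rule evaluation: rules are processed in priority order (lowest number first) and the first matching rule decides. The range `203.0.113.0/24` is a documentation-only placeholder standing in for the business's own IP range, and the rule set is hypothetical. Real NSG rules also match on protocol, destination and direction, which this sketch leaves out.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class InboundRule:
    priority: int         # lower numbers are evaluated first
    source: str           # CIDR prefix, or "*" for any source
    port: Optional[int]   # destination port, or None for any port
    allow: bool


def is_allowed(rules: List[InboundRule], source_ip: str, port: int) -> bool:
    """Return the decision of the first rule (by priority) that matches."""
    addr = ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        source_matches = rule.source == "*" or addr in ip_network(rule.source)
        port_matches = rule.port is None or rule.port == port
        if source_matches and port_matches:
            return rule.allow
    return False  # nothing matched: treat as denied


# Hypothetical rule set: RDP only from the business-owned range, deny the rest.
rules = [
    InboundRule(priority=100, source="203.0.113.0/24", port=3389, allow=True),
    InboundRule(priority=4096, source="*", port=None, allow=False),
]

print(is_allowed(rules, "203.0.113.25", 3389))  # from the owned range
print(is_allowed(rules, "198.51.100.7", 3389))  # from elsewhere on the Internet
```

Even with such a rule in place, the identity checks performed by Microsoft Entra ID still apply. The network restriction narrows where a logon attempt may come from, and the RBAC roles decide who may complete it.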

Improvements that produce additional costs:

- Azure Bastion may be deployed as a regional service. It would add some moderate cost, but significantly reduce the risk of attack to the workload VMs, as these would no longer need a public IP address. It would also reduce the operational burden as Azure Bastion is a managed PaaS service, and the NSG for the workload VMs can be further tightened and automatically managed.

- Azure Firewall may be deployed on the Virtual Network, which would decisively improve the security posture, but also introduce costs for the solution itself as well as operational costs.

Improvements that produce additional costs and require some more advanced Network concepts:

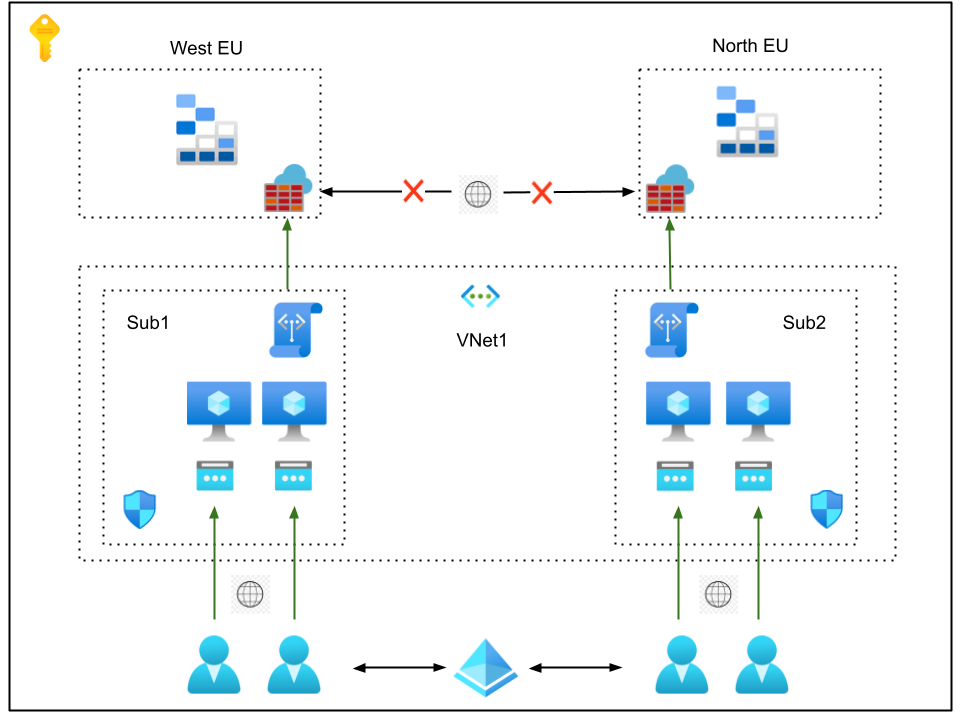

Design Concept Summary

- Users log on to the VMs with the accounting software with their tenant identities vetted by HR and controlled by the tenant administrators

- Microsoft Entra ID is the identity provider; MFA can and should be required

- The VMs are RBAC-protected and made available only to the approved tenant identities

- Traffic can be controlled at the NSG level

- Limiting the IP range to the on-prem IP range would strengthen security at no cost

- Sub1 and Sub2 have access to the Storage Account (SA) via the Service Endpoint

- Encrypted Traffic between Sub1, Sub2 and the Storage Accounts is confined to the Azure Backbone

- The SA can be set to use read-access geo-redundant storage (RA-GRS) or read-access geo-zone-redundant storage (RA-GZRS), which would provide a solid automatic BCDR implementation and reduce read latency from the paired North Europe region

- The primary Storage Account in West Europe can and should be backed up as well

- The content of the primary Storage Account should be subject to WORM policies to protect the data from accidental or malicious alteration according to legal compliance standards

- Data can be added to the storage accounts from designated VMs and devices deployed to Sub1 and Sub2, accessed and operated only by the vetted identities

- In cases where data must be added from devices outside any controlled network, the shared access signature (SAS) mechanism could be used, provided it is properly implemented and managed
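The WORM bullet above comes down to a simple rule: while a time-based retention interval is running, or while a legal hold is set, a document can be read but not modified or deleted. The sketch below models only the delete decision and doesn't call Azure. The 10-year interval is an assumption standing in for the "several years" in the requirements, and the dates are made up.

```python
from datetime import datetime, timedelta, timezone


def can_delete(created_at: datetime, now: datetime, retention_days: int, legal_hold: bool = False) -> bool:
    """Return True only when neither the retention interval nor a legal hold blocks deletion."""
    if legal_hold:
        return False  # a legal hold blocks deletion regardless of age
    return now >= created_at + timedelta(days=retention_days)


RETENTION_DAYS = 10 * 365  # assumed retention interval, not taken from the scenario

uploaded = datetime(2024, 1, 15, tzinfo=timezone.utc)

# Six years after upload: still inside the retention interval.
print(can_delete(uploaded, datetime(2030, 1, 15, tzinfo=timezone.utc), RETENTION_DAYS))

# After the interval has elapsed, deletion becomes possible...
print(can_delete(uploaded, datetime(2034, 2, 1, tzinfo=timezone.utc), RETENTION_DAYS))

# ...unless a legal hold is still in place.
print(can_delete(uploaded, datetime(2034, 2, 1, tzinfo=timezone.utc), RETENTION_DAYS, legal_hold=True))
```

In the actual service this rule is enforced by the storage platform itself, not by application code, which is what makes it a useful defense against ransomware and accidental deletion.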

Service Endpoint

This is an essential part of the design, as a Service Endpoint allows subnets (Sub1 and Sub2) in an Azure VNet to advertise their private IP range to a PaaS service such as a Storage Account (many other PaaS services are supported as well, such as Azure SQL Database).

This has two consequences that considerably improve security at no cost:

- The PaaS Service, the Storage Account, can be isolated from the public internet via its Service Firewall by removing public access. This meets the design requirement that the Data Store must not be accessible from the public Internet. It will still be accessible from any IP address within the advertised subnets.

- Azure will route all traffic from the advertised subnets to the target PaaS service directly over the Azure Backbone Network, so the encrypted traffic stays off the public Internet. Note that this is a routing change rather than a DNS change: the service name still resolves to its public endpoint. This improves security as well as performance through lower latency.
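The two consequences above map onto two small pieces of configuration: the subnets enable the `Microsoft.Storage` service endpoint, and the storage account's firewall denies everything except those subnets. The sketch below builds both fragments as plain dictionaries using the property names found in ARM templates (`serviceEndpoints`, `networkAcls`, `virtualNetworkRules`). The address prefix, resource names and the `bypass` setting are assumptions for illustration.

```python
def subnet_with_storage_endpoint(address_prefix: str) -> dict:
    """Subnet properties with the storage service endpoint enabled."""
    return {
        "addressPrefix": address_prefix,
        "serviceEndpoints": [{"service": "Microsoft.Storage"}],
    }


def storage_network_acls(allowed_subnet_ids: list) -> dict:
    """Storage firewall settings: deny by default, allow only the listed subnets."""
    return {
        "defaultAction": "Deny",
        "bypass": "AzureServices",  # assumption: trusted Azure services stay allowed
        "ipRules": [],              # no public IP ranges are allowed in
        "virtualNetworkRules": [{"id": s, "action": "Allow"} for s in allowed_subnet_ids],
    }


def subnet_may_access(acls: dict, subnet_id: str) -> bool:
    """Simplified check of whether traffic from a subnet passes the firewall."""
    if acls["defaultAction"] == "Allow":
        return True
    return any(
        rule["action"] == "Allow" and rule["id"].lower() == subnet_id.lower()
        for rule in acls["virtualNetworkRules"]
    )


# Hypothetical resource IDs for the two subnets in the design.
VNET = (
    "/subscriptions/<subscription-id>/resourceGroups/rg-accounting"
    "/providers/Microsoft.Network/virtualNetworks/vnet-accounting"
)
SUB1, SUB2 = f"{VNET}/subnets/Sub1", f"{VNET}/subnets/Sub2"

sub1_properties = subnet_with_storage_endpoint("10.0.1.0/24")  # assumed prefix
acls = storage_network_acls([SUB1, SUB2])
print(subnet_may_access(acls, SUB1))
print(subnet_may_access(acls, f"{VNET}/subnets/Sub3"))
```

Keeping `ipRules` empty is what matches the requirement that the data must not be exposed on the public Internet. If uploads from outside the controlled network were ever needed, that list is where a narrowly scoped exception would go.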

Conclusion

This POC and some variations of it meet all the business requirements and strive to implement the functional, operational and security benchmarks on a reduced budget.

It’s often the case that reference implementations leveraging cloud services, and available online as design resources, may look overly complex and expensive, especially when they intersect with the demanding security standards necessary to meet regulations and certification, such as the various ISO initiatives.

This POC shows that, with the proper knowledge, it’s possible to choose a basket of cloud features and services, extracted from a selection of reference implementations, to quickly reach the targeted benchmarks within acceptable cost ranges and within reasonable time frames. Cost and implementation time can often be kept much lower than with on-prem implementations, as these might require purchasing and outfitting additional, specialized hardware or software.

Furthermore, cloud implementations provide some flexibility and built-in future-proofing in that it’s possible, even after their initial release, to integrate new services, leverage new features introduced in services already in use, or simply tweak the designs to maintain or even incrementally raise the quality of the solution, while remaining within the same overall budget.

This design may be used as a starting point for other articles in this series to explore the implementation options in more detail and adjust them to fit changing requirements and budgets.
