Architecting a Portable Red Team Engine

This is the first article in the RTO series.

The Problem

Red team and penetration testing activities are full of repetition: network scans, reconnaissance, OSINT collection, and routine validation tasks are all necessary, but they’re also time-consuming and error-prone when executed manually.

Over time, most teams end up with a zoo of scripts, half-maintained tools, and bespoke glue code that works… until it doesn’t. Unfortunately, this was precisely the situation our team found itself in.

The proprietary software we internally referred to as RTS (Red Team Script) had served us faithfully for years, reliably automating large parts of our operations. But now it was beginning to show clear signs of fatigue, gently suggesting that its time was nearing an end.

What we casually called a “script” had, after years of incremental additions, evolved into a massive monolith: difficult to maintain, painful to debug, and riddled with spaghetti code resulting from rushed, ad-hoc changes made under pressure.

Moreover, RTS was never designed with reproducibility in mind. As a tool responsible for orchestrating multiple penetration testing utilities, it had an unfortunate tendency to fail in different and creative ways depending on the specific configuration of the red team operator’s machine or server on which it happened to run.

Finally, RTS implemented everything directly in code: integrations with third-party tools, execution flows, and glue layers were all handcrafted via dedicated and hyper-opinionated business logic. As a result, every new capability came at the cost of additional complexity and ownership.

In short, it was time to retire RTS and allow it to be reborn in a more modern form.

The Solution

Taking the limitations of RTS to heart, we laid out the initial desiderata for its successor. The new platform had to:

- Inspire confidence within the team, both in daily usage and long-term maintenance

- Accelerate report production and post-engagement deliverables

- Provide a standardized baseline for recurring activities such as VA, VAPT, and WAPT

- Be easily extensible and integrable with new tools

- Be portable, allowing execution on laptops, customer environments, or a centralized server for long-running activities

- Remain as uncommitted as possible to any single tool or vendor

- Be structured and scalable by design

- Offer well-organized and predictable output handling

- Be fully versionable, with tool versions explicitly pinned

- Be properly documented

After weeks of brainstorming, discussions, and carefully weighing trade-offs, RTO (Red Team Orchestrator) was finally born!

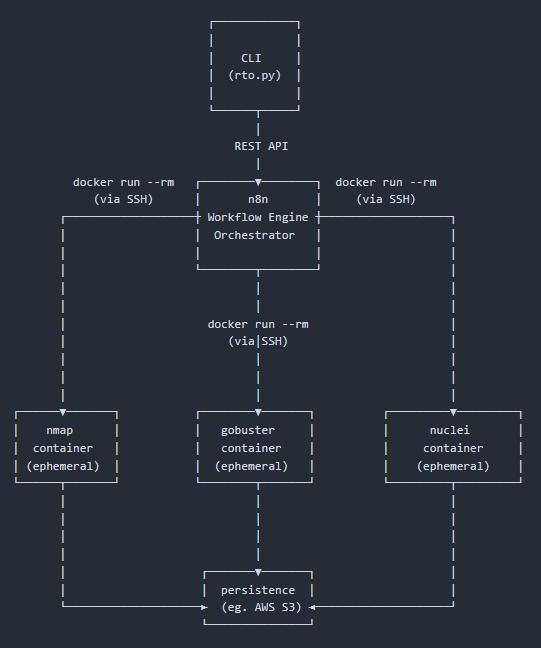

RTO is a plug & play, infrastructure-level engine designed to automate repetitive pentesting tasks through n8n.

Here’s the idea:

The only prerequisite is Docker: RTO runs on any Docker host.

The whole architecture is defined and managed via Docker Compose, and the core component of the engine is a container running n8n. The workflows executed by this component are versioned within an RTO repository, allowing for a GitOps approach.

The workflows can leverage multiple additional containers: each tool can be packaged as an individual container, or a single container may bundle multiple tools. Communication between the main orchestrator (the n8n container) and the tool containers is handled via SSH nodes, allowing the n8n container to execute Docker commands on the underlying host.
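To make the SSH-to-Docker hop more concrete, here is a minimal Python sketch of how the command string handed to an SSH node could be assembled. It is an illustration, not RTO's actual code: the function name `build_tool_command`, the image name `example/scanner`, the `/output` mount point, and the rule that only fully pinned `MAJOR.MINOR.PATCH` tags are accepted are all assumptions made for this example.

```python
import re
import shlex

# Hypothetical rule: accept only fully pinned semantic versions such as "1.4.2".
SEMVER_TAG = re.compile(r"^v?\d+\.\d+\.\d+$")


def build_tool_command(image: str, tag: str, tool_args: list[str], output_dir: str) -> str:
    """Return the shell command an SSH node could run on the Docker host."""
    if not SEMVER_TAG.match(tag):
        raise ValueError(f"image tag must be a pinned semantic version, got {tag!r}")
    argv = [
        "docker", "run", "--rm",
        # Mount a host directory so the tool's results outlive the container.
        "-v", f"{output_dir}:/output",
        f"{image}:{tag}",
        *tool_args,
    ]
    # shlex.join quotes every argument, so the string is safe to hand to a shell.
    return shlex.join(argv)


if __name__ == "__main__":
    print(build_tool_command("example/scanner", "1.4.2", ["--target", "10.0.0.5"], "/srv/rto/out"))
```

Rejecting floating tags such as `latest` at this point is one way to keep runs reproducible across operator machines.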

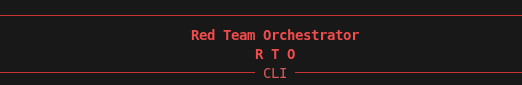

Since n8n workflows can also be triggered externally via webhooks and HTTP calls, the engine becomes fully programmable and can be wrapped behind a command line interface.
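As a rough idea of what such a command line wrapper could look like, the following Python sketch turns CLI arguments into an HTTP POST against an n8n webhook. It assumes n8n is reachable on its default port (5678), that the workflow starts with a Webhook node, and that the webhook path equals the workflow name; the `rto` program name, the `--target` option, and the JSON payload shape are hypothetical.

```python
import argparse
import json
import urllib.request

# Assumption: default n8n port and production webhook prefix.
DEFAULT_BASE_URL = "http://localhost:5678/webhook"


def build_request(base_url: str, workflow: str, params: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request that triggers a workflow."""
    url = f"{base_url.rstrip('/')}/{workflow}"
    body = json.dumps(params).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def main(argv=None) -> int:
    """Parse CLI arguments, trigger the workflow, and print the response."""
    parser = argparse.ArgumentParser(prog="rto", description="Trigger an RTO workflow")
    parser.add_argument("workflow", help="webhook path of the workflow to run")
    parser.add_argument("--target", required=True, help="host or range to test")
    parser.add_argument("--base-url", default=DEFAULT_BASE_URL)
    args = parser.parse_args(argv)

    request = build_request(args.base_url, args.workflow, {"target": args.target})
    with urllib.request.urlopen(request, timeout=30) as response:
        print(response.status, response.read().decode("utf-8", errors="replace"))
    return 0
```

Calling `main(["portscan", "--target", "10.0.0.5"])`, or wiring `main()` to a console entry point, would post `{"target": "10.0.0.5"}` to the `portscan` webhook. Keeping request construction in `build_request` makes the wrapper easy to test without a running n8n instance.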

Scans still store their results locally, since the n8n container requires a data volume. However, to enable synchronization across team members, additional workflow steps are added to save results to external services, such as AWS S3, or directly on Sysreptor, the report automation platform we’ve chosen to use.
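One way to keep local results and their synchronized copies aligned is to derive both locations from the same key. The Python sketch below shows a possible layout; the `project/tool/timestamp/filename` scheme and the function names are assumptions for illustration, not the layout RTO actually uses.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath


def result_key(project: str, tool: str, started_at: datetime, filename: str) -> str:
    """Return a predictable, sortable key for one result file."""
    # Normalize to UTC so keys sort consistently regardless of where the scan ran.
    stamp = started_at.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return str(PurePosixPath(project) / tool / stamp / filename)


def local_path(data_root: str, key: str) -> str:
    """Map a key onto the data volume mounted in the n8n container."""
    return str(PurePosixPath(data_root) / key)


if __name__ == "__main__":
    started = datetime(2024, 5, 1, 12, 30, 0, tzinfo=timezone.utc)
    key = result_key("acme-2024", "portscan", started, "scan.xml")
    print(key)
    print(local_path("/data/results", key))
```

The same key can then serve as the object name in an external store, so a result is found at the same relative location locally and remotely.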

Here’s a high-level architecture diagram:

The advantage of leveraging n8n lies in its ease of workflow creation, versioning, and maintenance, along with the ability to aggregate multiple workflows. On top of that, it offers an extensive ecosystem of pre-built integrations for third-party tools and services ranging from cloud providers to AI platforms and beyond.

Furthermore, as previously mentioned, once the pentesting workflows have been completed, tested, and verified as functional, they can be easily exported using a simple Docker command. At that point, they can be pushed to Git and synchronized by all Red Team members upon the next pull, effectively enabling a de facto GitOps and Infrastructure‑as‑Code approach.
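The export step can be scripted in a few lines. This Python sketch uses the n8n CLI's `export:workflow` command through `docker compose exec`; the Compose service name `n8n`, the container path `/workflows`, and the assumption that this path is bind-mounted to a `workflows` folder in the repository are illustrative choices, not details confirmed by the article.

```python
import subprocess


def export_command(service: str = "n8n", output_dir: str = "/workflows") -> list[str]:
    """Return the command that dumps every workflow as a separate JSON file."""
    return [
        "docker", "compose", "exec", "-T", service,
        "n8n", "export:workflow", "--all", "--separate", f"--output={output_dir}",
    ]


def export_and_stage(repo_dir: str, workflows_subdir: str = "workflows") -> None:
    """Export the workflows, then stage them for the next Git commit."""
    # Assumption: output_dir inside the container is bind-mounted to
    # repo_dir/workflows_subdir on the host.
    subprocess.run(export_command(), cwd=repo_dir, check=True)
    subprocess.run(["git", "add", workflows_subdir], cwd=repo_dir, check=True)
```

Exporting one file per workflow keeps Git diffs small, which makes reviews of workflow changes easier.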

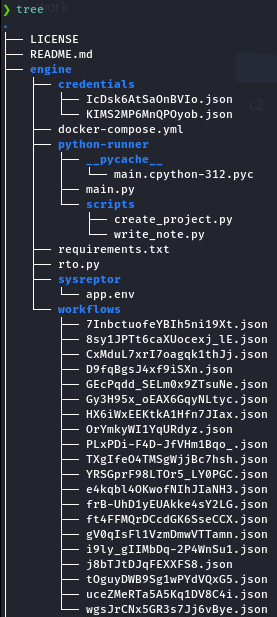

Here’s an overview of what the early project folder structure looked like:

How We Use RTO

The high-level user procedure for utilizing the tool is as follows:

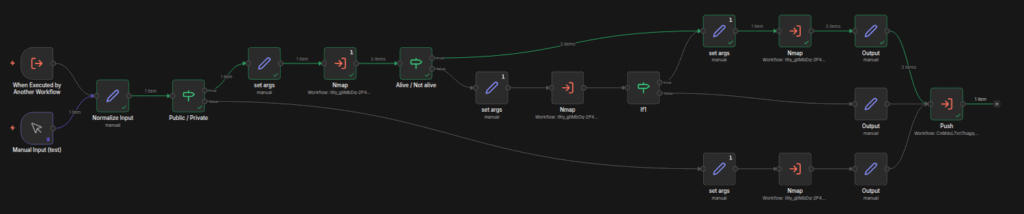

- New workflows are created and tested directly through the n8n UI:

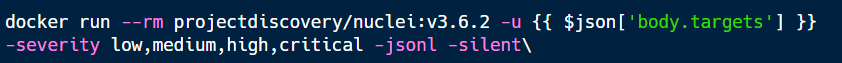

When a workflow requires the use of an external pentesting tool, it’s executed within a container with a pinned semantic version, ensuring reproducibility:

- Once a workflow is stable, it’s exported and versioned; from that point onward, the workflow can be fully triggered via the RTO CLI:

- When a new campaign begins, the first step is to create the project in a shared system.

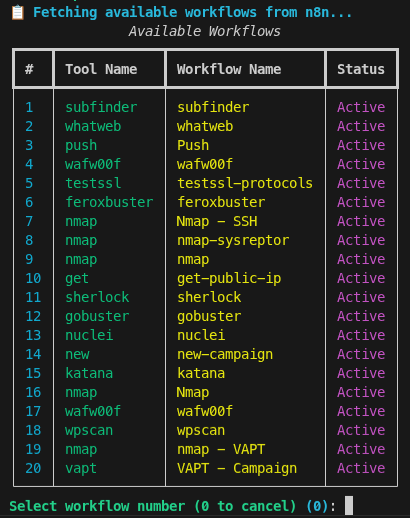

The choice of platform depends entirely on the pentesting team and the tools they use.

- Subsequently, the workflows are executed. Scan results are automatically processed through parsers and posted as notes in the corresponding project. This setup allows multiple consultants to work in parallel on the same task efficiently.
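As an illustration of the parsing step, here is a small Python sketch that turns Nmap XML output into a Markdown note listing the open ports per host. Nmap is used only as a familiar example of a scanner; the function name and the note format are assumptions, and the step that posts the note to the project platform is deliberately left out because it depends on the platform's API.

```python
import xml.etree.ElementTree as ET


def nmap_xml_to_note(xml_text: str) -> str:
    """Summarize open ports per host as a Markdown note."""
    root = ET.fromstring(xml_text)
    lines = []
    for host in root.iter("host"):
        address = host.find("address")
        addr = address.get("addr") if address is not None else "unknown"
        lines.append(f"## {addr}")
        for port in host.iter("port"):
            state = port.find("state")
            # Skip closed or filtered ports; only open ones go into the note.
            if state is None or state.get("state") != "open":
                continue
            service = port.find("service")
            name = service.get("name", "unknown") if service is not None else "unknown"
            lines.append(f"- {port.get('portid')}/{port.get('protocol')}: {name}")
    return "\n".join(lines)
```

Producing plain Markdown keeps the parser independent of the note-taking platform, so the same output can be posted wherever the team tracks its projects.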

Conclusion

In this first article of the series, we presented the key requirements and challenges faced by our team, as well as the research and development processes that ultimately led to the creation of RTO. We explored how our need for efficiency, automation, and collaboration drove the design of the tool, and highlighted the iterative experimentation and problem-solving that shaped its development.

This series aims to provide a comprehensive look into our engineering journey, sharing insights, lessons learned, and the practical approaches we employed to streamline complex workflows. If you’re interested in the intersection of innovation and offensive security, stay tuned as we continue to take you through the evolution of RTO and demonstrate how it can transform technical processes for Red Team operators.
