Elastic Integration with Huge Memory Usage? Keep That Host Accessible!

In some environments, Elastic Agent integrations can unexpectedly consume excessive memory. Possible causes include misbehaving integrations, memory leaks, or simply under-provisioned hosts. When this happens, the Linux kernel's OOM (out-of-memory) killer may terminate the processes of the Elastic Agent service, which usually disrupts data ingestion.

How to Detect the Issue

System Logs

If your Elastic Agent is being killed unexpectedly, check the system logs:

# grep -i out.of.memory /var/log/messages{-*,} |tail

...

/var/log/messages-20250605:Jun 5 14:21:11 HOSTNAME kernel: Memory cgroup out of memory: Killed process 3481 (agentbeat) total-vm:35608848kB, anon-rss:28517004kB, file-rss:69696kB, shmem-rss:0kB, UID:0 pgtables:62396kB oom_score_adj:0

This is a clear sign that your Agent exceeded available memory and was forcibly terminated.
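To make kernel messages like the one above easier to scan, a small filter can extract the process ID, process name, and resident memory from each kill line. The following is only a minimal sketch: `oom_summary` is a hypothetical helper name (not part of Elastic Agent or any system tool), and it assumes the log line format shown above.

```shell
# Hypothetical helper: summarize kernel OOM-kill lines read from stdin.
# Assumes lines contain "Killed process <pid> (<name>)" and "anon-rss:<n>kB".
oom_summary() {
  sed -nE 's/.*Killed process ([0-9]+) \(([^)]+)\).*anon-rss:([0-9]+)kB.*/\1 \2 \3/p' |
  while read -r pid name rss_kb; do
    # Integer division: kB -> MiB -> GiB (rounded down)
    echo "pid=$pid name=$name anon_rss_gib=$((rss_kb / 1024 / 1024))"
  done
}
```

It could be used as `grep -i out.of.memory /var/log/messages{-*,} | oom_summary`. For the log line above it prints `pid=3481 name=agentbeat anon_rss_gib=27`; lines that do not match the assumed format are silently skipped.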

System Load

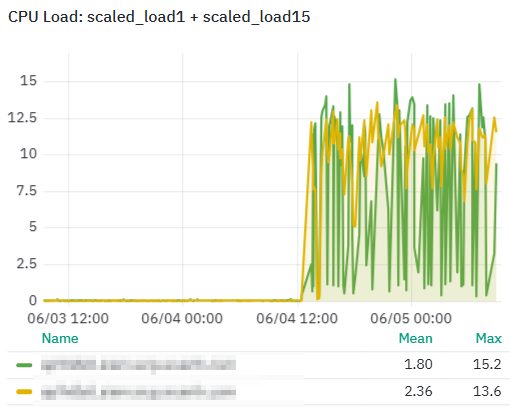

Choose any method to monitor the system load; for example the CPU load check in NetEye Monitoring:

How to Prevent Unresponsive Hosts

When the Agent consumes this much memory, the load on the host can grow until the host itself becomes inaccessible. To safeguard your system, use systemd’s MemoryMax directive to cap the Elastic Agent’s memory usage. A good rule of thumb is to reserve 3 GB for the OS and other services, and allocate the rest to the Agent.

# create directory for elastic agent service custom values

mkdir -p /etc/systemd/system/elastic-agent.service.d

# on a system with 16 GB of RAM, allow at most 13 GB for elastic agent

echo -e "[Service]\nMemoryMax=13G" > /etc/systemd/system/elastic-agent.service.d/memorymax.conf

# tell systemd to accept new config

systemctl daemon-reexec

systemctl daemon-reload

systemctl restart elastic-agent.service

This will keep the agent within safe memory limits, improving system stability and reliability.
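The rule of thumb above (total RAM minus roughly 3 GB) can also be computed instead of hard-coded. The sketch below is one possible way to do that, not the method used in this article: `memory_max_for` is a hypothetical helper name, and it assumes a Linux host where `/proc/meminfo` reports `MemTotal` in kB.

```shell
# Hypothetical helper: derive a MemoryMax value from total RAM.
#   $1 = total RAM in kB (optional, defaults to MemTotal from /proc/meminfo)
#   $2 = GB to reserve for the OS and other services (optional, defaults to 3)
memory_max_for() {
  total_kb=${1:-$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)}
  reserve_gb=${2:-3}
  [ -n "$total_kb" ] || { echo "cannot determine total memory" >&2; return 1; }
  total_gb=$(( total_kb / 1024 / 1024 ))   # rounded down to whole GiB
  limit=$(( total_gb - reserve_gb ))
  if [ "$limit" -lt 1 ]; then
    echo "not enough memory to reserve ${reserve_gb} GB" >&2
    return 1
  fi
  echo "${limit}G"
}
```

For example, `memory_max_for 16777216` (exactly 16 GiB expressed in kB) prints `13G`. On a real host, `MemTotal` is usually somewhat lower than the installed RAM, so the computed value may come out 1 GB below the hand-calculated one. The result could be written into the drop-in with `printf '[Service]\nMemoryMax=%s\n' "$(memory_max_for)"`, and after the restart `systemctl show -p MemoryMax elastic-agent.service` reports the limit systemd has applied.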

Next Steps

The above suggestions only allow you to continue to access the machine that hosts the Elastic Agent. You’ll still have to investigate the underlying reason that it’s using too much memory.
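As a first step in that investigation, it can help to see which processes currently hold the most resident memory. The following is a rough sketch under stated assumptions: `top_rss` is a hypothetical helper name, and it expects input lines of the form `<rss in kB> <pid> <command>`, which is what `ps -eo rss=,pid=,comm=` produces.

```shell
# Hypothetical helper: print the N largest processes by resident memory.
# Reads "<rss_kb> <pid> <command>" lines from stdin; $1 = N (defaults to 5).
top_rss() {
  sort -k1,1nr | head -n "${1:-5}" |
  awk '{ printf "%d MiB  pid=%s  %s\n", $1 / 1024, $2, $3 }'
}
```

A typical call would be `ps -eo rss=,pid=,comm= | top_rss 5`. Only the first word of each command name is shown, so names containing spaces appear truncated.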

If you want a quick solution, try to “throw money at it”, or in this case, throw memory at it: give the machine an additional 64 GB of RAM and limit the service to 64 GB. In my experience, 64 GB is usually enough for any single process.

I saw this behavior (very high RAM usage) with the Elastic MISP integration. In the end, the Elastic Agent service was given 45 GB, with the MISP integration subprocess peaking at around 32 GB.
