NetEye and Space Missions: How Work in the NetEye R&D Team Contributed to My Research Project

Having recently completed an internship at a lab at the University of Trento, I noticed how much my work at Würth Phoenix influenced the research I conducted there. In this post I’d like to share that project and explain how it was shaped by my work in Würth Phoenix’s NetEye R&D team.

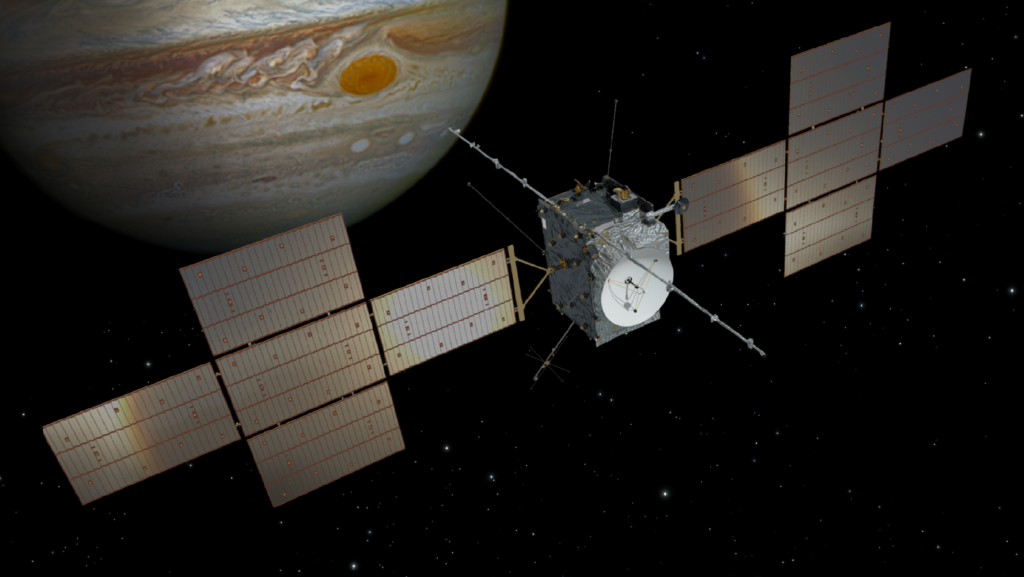

My internship work involved creating a proof of concept (PoC) of a framework for analyzing the data produced by the JUICE mission.

Taking a few steps back, the JUICE (JUpiter ICy Moons Explorer) mission aims to investigate, through detailed observations, the gas giant Jupiter and three of its moons (Ganymede, Callisto and Europa), which are thought to hide oceans beneath their icy crusts. The spacecraft will use a suite of sophisticated remote sensing instruments to characterize the three moons as planetary objects by measuring several parameters of their surfaces, atmospheres and electromagnetic properties.

One of the instruments on board JUICE is RIME (Radar for Icy Moon Exploration), an active ground-penetrating radar that ESA commissioned from a lab at the University of Trento, the same lab where I did my internship. To give an idea of its capabilities, the radar will map what lies beneath the surface of Jupiter’s moons, probing down to a depth of 9 km. The resulting telemetry stream will then be processed and analyzed to extract information and to look for possible water reserves (and maybe even life, who knows) beneath the surface.

My contribution was to design a distributed architecture for processing and analyzing the incoming telemetry from the RIME sensor, while meeting several requirements as closely as possible:

- Robustness: The probe will only reach Jupiter’s moons in 2031. The system therefore needs a structure that can stand the test of time and be upgraded easily, built from components and technologies that can be replaced if they are discontinued.

- Scalability: System scalability is critical in several respects. The most important one is closely tied to the robustness requirement: it must be possible to expand the lab servers over time without much difficulty and without major changes to the framework.

- Flexibility: The system must accommodate a wide variety of processing and analysis algorithms. The science team may need simple conversions and renderings of radargrams, but also analysis algorithms that use machine learning to reconstruct the scanned environment by combining RIME data with data from other sensors on board the probe. In addition, the research team’s algorithms are structured as pipelines of processing blocks that can depend on one another as the data flows through them.

Just as I was thinking about how to build a system that could meet all these requirements, I began to learn more about the technologies used by the DevOps team in the R&D office. As in my project, these are technologies for distributed systems based on containerized applications, but they serve a totally different purpose: the main objective of our DevOps infrastructure is to provide a continuous integration (CI) service that facilitates the development, testing and even delivery of our product. The framework I designed, on the other hand, will be used to process and analyze telemetry from the RIME sensor.

Despite the totally different purposes of these tools, the pipelines used by CI processes closely resemble the telemetry processing and analysis pipelines. Although tools like Tekton were not designed for data processing, for the specific case I was studying Tekton seemed to be the optimal choice. My study therefore focuses on how technologies created to support CI/CD (Continuous Integration / Continuous Delivery) can meet the requirements of the JUICE mission’s data processing center.
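
To make the similarity concrete, here is a minimal sketch in Python of a telemetry pipeline described the way a CI pipeline is: as a set of blocks, each declaring which blocks must finish before it can start. This is only an illustration, not the code of my PoC: the block names and the `run_pipeline` helper are hypothetical, and the ordering relies on `graphlib.TopologicalSorter` from the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical processing blocks (names invented for illustration).
# Each block maps to the set of blocks it depends on.
PIPELINE = {
    "decode_telemetry": set(),
    "calibrate": {"decode_telemetry"},
    "render_radargram": {"calibrate"},
    "ml_analysis": {"calibrate"},
    "report": {"render_radargram", "ml_analysis"},
}


def execution_order(pipeline):
    """Return the block names in an order that respects every dependency."""
    # static_order() raises graphlib.CycleError if the blocks depend on
    # each other in a loop.
    return list(TopologicalSorter(pipeline).static_order())


def run_pipeline(pipeline, blocks, telemetry):
    """Run each block after its dependencies and collect all outputs.

    `blocks` maps a block name to a callable taking the raw telemetry and
    a dict with the outputs of the block's dependencies.
    """
    results = {}
    for name in execution_order(pipeline):
        inputs = {dep: results[dep] for dep in pipeline[name]}
        results[name] = blocks[name](telemetry, inputs)
    return results


if __name__ == "__main__":
    # Dummy blocks that only record which inputs they received.
    dummy_blocks = {
        name: (lambda telemetry, inputs, n=name: f"{n}({sorted(inputs)})")
        for name in PIPELINE
    }
    for name, output in run_pipeline(PIPELINE, dummy_blocks, b"raw").items():
        print(name, "->", output)
```

A CI/CD tool expresses the same idea declaratively. In Tekton, for example, a pipeline task can list the tasks it must wait for in its `runAfter` field, and each task runs in its own container, which is what makes the model appealing for independent, replaceable processing blocks.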

Working at the R&D office helped me tremendously in researching and implementing the PoC of the framework. I not only began to learn about many new, cutting-edge technologies that could also be applied to my research, but also learned how to organize my work and, above all, how to improve the development process. The methodologies and best practices involved may seem difficult to adopt at first, but they make development easier and can greatly speed up the work.
