GLPI: Automated Inventory Without Using GLPI Agents

Key takeaways:

- You can use the native inventory format to import assets from any source

- Inventory import rules apply

- The lock mechanism works as for GLPI agent imports

- The imports are limited to the objects and properties currently imported also by the glpi agent and its accessory tools

A comprehensive asset management database must necessarily be fed both automatically and by hand. Of course, the more data you can import automatically, the more the manual activity can focus on improving coverage, on adding responsibilities, descriptions and other data that is not (yet) available digitally, and of course on cross-checking and improving data quality.

GLPI Agents

GLPI provides a powerful inventory agent for most operating systems, facilities for the inventory of ESX and Nutanix virtualization systems, and an SNMP-based inventory for network devices and printers.

However, this list is far from complete of course, and most importantly, in several environments it’s not possible or even permitted to install these agents. Here I’d like to show you how to import assets through GLPI’s versatile native inventory interface, without using GLPI agents.

Adding Inventory from Different Sources

The data could be retrieved from other agents which are already installed (e.g. an antivirus agent or a monitoring agent), or provided by centralized management tools.

We even implemented an inventory of virtual machines in a vSphere environment. You could say that the GLPI agent “ESX inventory” tool already implements this functionality. However, it delivers hardware details only for the hosts. We retrieve lots of VM details from the vSphere API, beyond CPU and RAM: the drives with total and free space, the operating system, the network ports and the UUID to link the VM to its host (which was retrieved by the ESX inventory tool).

The GLPI native inventory server accepts inventory files in XML and JSON format, through a simple HTTP POST request. We use JSON, which is simpler to generate, read and modify manually. A detailed schema of the accepted JSON can be found at https://github.com/glpi-project/inventory_format/blob/main/inventory.schema.json.

To make it simple, just look at the JSON file generated by a GLPI Agent. You’ll find practically everything there that’s defined in the schema, from computer hardware to the OS, installed applications, database instances, virtual machines and virtualization hosts. At https://github.com/glpi-project/inventory_format/tree/main/examples you’ll find multiple examples.

However, this is at the same time its main limitation: the format doesn’t support any additional data which is not already available in a standard agent. For example, you can define the “location” of a network device (because it’s a standard field in the SNMP interface), but for a computer inventory the “location” field is not supported at all, because it’s not usually defined anywhere in a PC or server.

If you have a source which also contains the location, you need to store it e.g. in the comment, and then, inside GLPI, you try to copy it to the location field with a business rule.

We wrote scripts, which are scheduled daily, read the data from vSphere or from other 3rd party computer management interfaces, and generate the aforementioned JSON files.

Such JSON files are natively sent to the GLPI server by the glpi-agent, but every agent installation contains also a tool called glpi-injector. The glpi-injector just takes a single JSON file or a directory full of JSONs and pushes them to GLPI’s native inventory interface. Just try it with any GLPI agent JSON file, e.g., with a glpi-agent installed on the VM running the GLPI web interface:

glpi-injector -f examplefile.json –url https://127.0.0.1/glpi/front/inventory.php –no-ssl-check

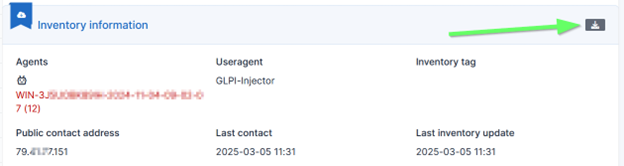

Maybe you don’t know how to download a JSON file from GLPI: Just click on the download icon in the bottom right of the main tab of any computer. Note that this is really the JSON that was uploaded. It doesn’t contain any change done manually afterwards.

JSON injection is currently supported only for the following Item types: Computer, Phone, NetworkEquipment, Printer, and “Unmanaged” assets, whose type is not yet defined, and that can subsequently be converted by hand to one of the four aforementioned types.

Benefits and Drawbacks

Benefits of using JSON injection:

- The full inventory can be imported at once from a single file. Conversely, for importing an asset through the GLPI REST API, you would need a huge number of requests for reading, updating and/or creating each single property of an asset, such as each memory module, operating system, and software.

- The import is triggered from outside GLPI and can be scheduled at the OS level. This isn’t possible with Teclib’s “datainjection” plugin that imports CSV files.

- The rules for import and link equipment apply, so you could for example create only computers with a hostname that starts with “IT”, or update only computers that are in status “active”.

- The “lock” mechanism applies, so manual changes are preserved, and global locks can be set.

Drawbacks or missing features:

- The JSON schema is limited, some properties and asset types are missing and there is no support for additional fields. Let’s hope this will improve with GLPI11.

- No priority rules can be defined if a single asset is updated with two inventory files from different sources. To avoid concurrent updates, we currently run a script that checks if a host already has an associated GLPI agent. If this is the case, the JSON file will not be created at all.

- The “lock” mechanism cannot be configured independently for different inventory sources.

Which sources would you like to try to tap with this mechanism?

These Solutions are Engineered by Humans

Did you find this article interesting? Does it match your skill set? Our customers often present us with problems that need customized solutions. In fact, we’re currently hiring for roles just like this and others here at Würth Phoenix.