In December 2022 we decided to completely restructure the code of our User Guide. Previously, each project contained its own documentation, but having the code scattered across more than 40 repositories made development difficult and time-consuming. Furthermore, our contributors are not necessarily NetEye developers, or even developers at all. Setting up a web server, compiling RPM packages and installing them to perform local tests was not an easy task for them.

For these reasons we decided to move all User Guide code to a single repository, making it easier to handle for both developers and contributors. And since we were already rethinking our User Guide pipelines, we also decided to find a way to simplify the entire build process to make it more maintainable.

Previously, we had one pipeline in charge of RPM builds, another responsible for building the Docker image containing the web server and the compiled code, and finally a service in charge of periodically updating the Docker image on our User Guide machine. This process worked for several years, but it was convoluted and tricky to change.

Since our OpenShift cluster had been in place for several months and we had been experimenting with it, we decided to take advantage of this huge refactoring to rebuild our User Guide from scratch on it. Furthermore, the User Guide is a medium-complexity project: it gave us the chance to touch on several different areas while, at the same time, not being too complex for our current know-how.

OpenShift brings several advantages over our previous approach:

- Clustered environment: OpenShift automatically provides as many replicas as required on different nodes, improving reliability

- Seamless updates: cluster updates can be completed from the user interface, and the process never changes more than one node at a time, so if any issue occurs the cluster is left in a consistent state

- Security: OpenShift enforces several security policies; in particular, pods must run unprivileged and as a non-root user

- Continuous deployment: unlike with our previous pipelines, we can use the same infrastructure not just to build but also to host the User Guide

As mentioned above, OpenShift has several security requirements, so the first thing to do was to re-create our Docker image from scratch in order to meet them. We used an unprivileged NGINX version as a base image. We also improved the build process to keep the number of installed components to a minimum: currently only the compiled HTML files and a few configuration files are installed, reducing both the image size and the attack surface.

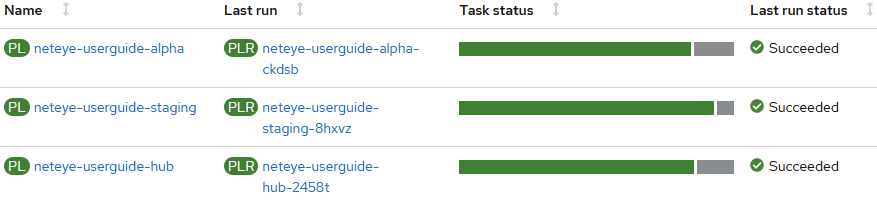

Next we re-created all the pipelines from scratch: in this case we had to completely change the process (we decided to create Docker images directly, without any “intermediate” RPM packages). To achieve this we also had to switch from Jenkins to Tekton.

This change wasn’t an easy one: Jenkins is “old style”, but it’s also really developer-oriented and comes with a lot of documentation. Tekton is mainly based on YAML configuration files through which you can invoke scripts, define all the required components, etc. It’s also much better at running parallel tasks, although unfortunately we did notice that some features that come out of the box with Jenkins are still not available on Tekton, and we had to implement them on our own. Maybe we’ll cover this part in a dedicated blog post.

Furthermore, we had to integrate our OpenShift infrastructure with Bitbucket Cloud in order to trigger pipelines and push changes automatically.

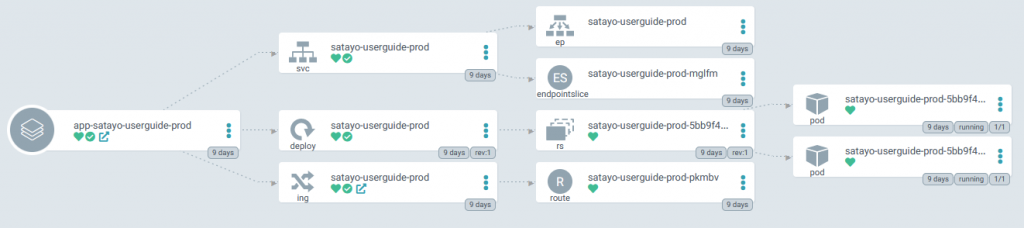

Finally, we had to create apps to run the User Guide on OpenShift: this was the relatively simple part. As mentioned above, OpenShift Apps can easily be parametrized to improve availability, for example by running several replicas. Furthermore, we introduced a new “smoke test” stage: this test deploys an actual version of the User Guide on a separate test App, allowing us to perform a complete end-to-end test (including firewalls, load balancers and certificates) in order to be sure that our app will be properly exposed once we release it to production.
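To give an idea of what such an end-to-end check can look like, here is a minimal Python sketch. It is not our actual pipeline code: the function names, the expected text and the URL in the usage note are hypothetical, and it assumes that a successful check simply means "the test App answers over HTTPS with a valid certificate, status 200, and a page containing a known string".

```python
import ssl
import urllib.request
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class SmokeResult:
    ok: bool
    reason: str


def fetch_over_https(url: str, timeout: float = 10.0) -> Tuple[int, str]:
    """Fetch a URL and return (status, body).

    The default SSL context verifies the certificate chain and the hostname,
    so an invalid or mismatched certificate raises an exception here.
    """
    context = ssl.create_default_context()
    with urllib.request.urlopen(url, timeout=timeout, context=context) as response:
        body = response.read().decode("utf-8", errors="replace")
        return response.status, body


def smoke_test(
    url: str,
    expected_text: str,
    fetch: Callable[[str], Tuple[int, str]] = fetch_over_https,
) -> SmokeResult:
    """Hypothetical smoke test: is the deployed test App reachable and sane?"""
    try:
        status, body = fetch(url)
    except OSError as exc:
        # URLError (including HTTP 4xx/5xx errors), ssl.SSLError and timeouts
        # are all OSError subclasses: blocked ports, broken routes and bad
        # certificates all end up here.
        return SmokeResult(False, f"request failed: {exc}")
    if status != 200:
        return SmokeResult(False, f"unexpected HTTP status {status}")
    if expected_text not in body:
        return SmokeResult(False, "expected text not found in page")
    return SmokeResult(True, "ok")
```

A pipeline step could call something like `smoke_test("https://userguide-test.example.com/", "User Guide")` (a placeholder URL) and fail the stage when `ok` is false. Because the request goes through the public route, it exercises the firewall, load balancer and certificate in one go. The `fetch` parameter is there only so the logic can be tested without a network.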

Release to production is now an automated step in our pipeline, and every change reaches our users within 10 minutes of the “merge” click on Bitbucket.

In conclusion, we successfully used our OpenShift cluster in production for the first time. We also reduced the release process from several hours to 10-20 minutes, mainly thanks to the huge workflow simplification, but also thanks to the new possibilities opened up by having all our OpenShift infrastructure defined as code and container-based.
