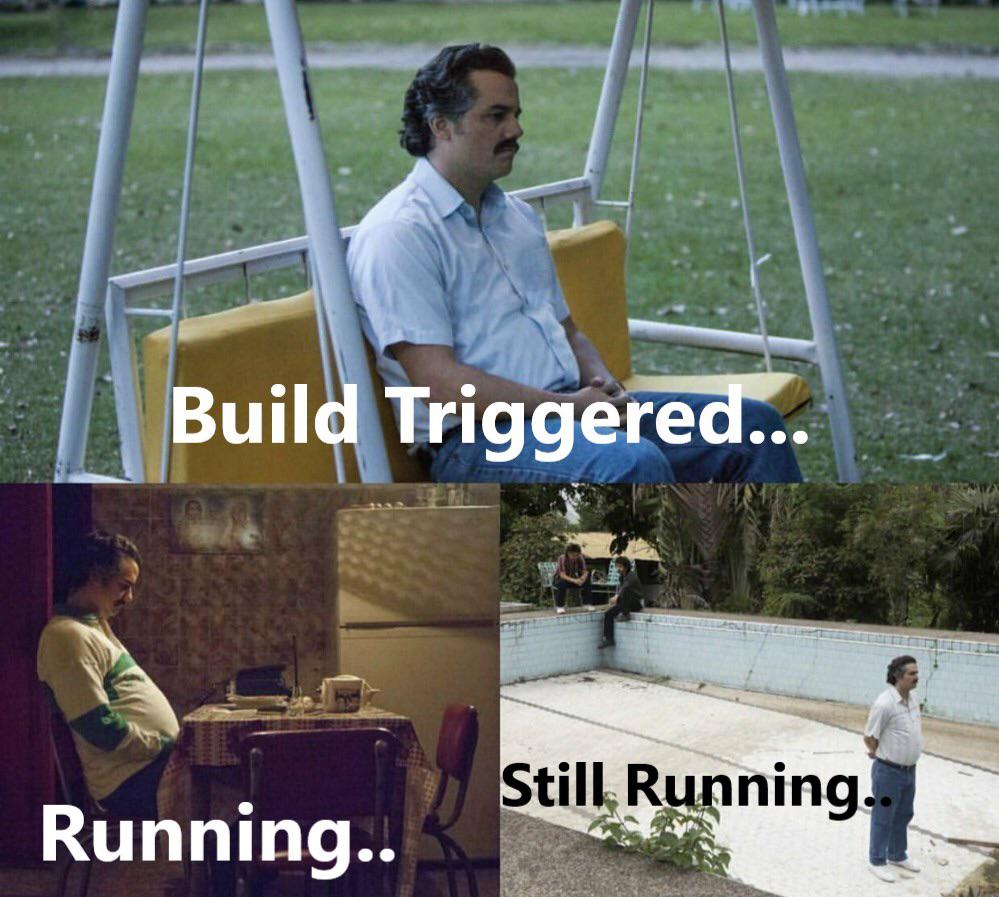

On February 3rd and 4th, 2024, we attended FOSDEM, a major event where thousands of free and open-source software developers from around the world gather to exchange ideas and collaborate. This year I dedicated much of the second day to the Testing and Continuous Delivery room. Continuous testing is essential for shipping software safely and reliably during NetEye development. Unfortunately, our relationship with Continuous Integration (CI) is often a love-hate one.

Over time, I noticed a growing frustration within the team, caused by both slow builds and flaky tests. So I went looking for inspiration in other people’s experiences. I immediately realized that, compared to what others were describing, something was wrong with our CI. At the end of the day, I felt a mix of emotions ranging from despair to anger. Why do our builds take more than 2 hours? Why are there so many flaky tests?

FOSDEM had given me new energy; I had to act in some way. So, after a few days, with a clearer mind, I began to think the problem through and asked myself two questions:

What are the longest stages in the pipeline?

Why do some tests fail randomly, and how can they be fixed?

Settle Down a Second

Before continuing, I need to provide more context on what we test in NetEye and how. Every code change a developer makes gets built and installed in a NetEye ecosystem consisting of a master node with all feature modules enabled and two satellites. Hundreds of tests are then initialized and executed, and if they all pass, the code can go into production.

It was time to analyze our pipeline and its various stages:

30 minutes for master setup

30 minutes for satellite setup

50 minutes or more for testing

For the master setup, also known as neteye_secure_install, part of our team is already working to speed it up and parallelize it. So, I decided to investigate the other parts.

Why does it take so much time to set up two satellites? They’re lightweight, with few things installed; they should be fast…

It didn’t take me long to realize that by the end of the setup, many packages and feature modules that don’t belong on the satellites had been installed, slowing everything down. What was happening?

The Speedup

To understand the problem, I first need to explain how tests are shipped: for each package (e.g. ‘icinga2’) there is a companion package containing its tests (e.g. ‘icinga2-devel’). To install the devel packages, the pipeline simply used the wildcard pattern *-devel. As a result, it installed not only the devel packages we needed, but also all the unnecessary ones, which in turn pulled in their non-devel packages as dependencies. For example, elasticsearch-devel was installed, which in turn installed elasticsearch. What an incredible discovery!
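To make the difference concrete, here is a minimal Python sketch of the two selection strategies. The post does not show how the pipeline was actually fixed, so this is only one possible approach: the function names, and the idea of deriving the list from the packages already installed on the node, are assumptions made for illustration.

```python
from fnmatch import fnmatch

DEVEL_SUFFIX = "-devel"


def wildcard_devel_packages(available):
    """Old behaviour: every available package matching *-devel is selected."""
    return sorted(pkg for pkg in available if fnmatch(pkg, "*" + DEVEL_SUFFIX))


def select_devel_packages(installed, available):
    """Hypothetical fix: keep only devel packages whose base package
    is already installed on this node."""
    installed_set = set(installed)
    return sorted(
        pkg
        for pkg in available
        if pkg.endswith(DEVEL_SUFFIX)
        and pkg[: -len(DEVEL_SUFFIX)] in installed_set
    )


if __name__ == "__main__":
    # Illustrative data only: a satellite that has icinga2 but not elasticsearch.
    available = ["icinga2", "icinga2-devel", "elasticsearch", "elasticsearch-devel"]
    installed_on_satellite = ["icinga2"]

    print(wildcard_devel_packages(available))
    print(select_devel_packages(installed_on_satellite, available))
```

With the wildcard, elasticsearch-devel is selected even though the satellite never had elasticsearch; the filtered version only selects test packages for software that is actually there.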

The fix to the pipeline was quick, and it cut the satellite setup time in half, to 15 minutes.

I was very happy, but still not satisfied. I felt like something was slipping out of our grasp. Then, all of a sudden, we realized that it had been right in front of us the whole time: why were we configuring the two satellites sequentially, even though they are independent of each other?

So, we parallelized the setup of the two satellites, and voilà, the time was more than halved again, to about 6 minutes.
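The idea can be sketched in a few lines of Python. This is not our pipeline code: `setup_all`, `fake_setup` and the satellite names are hypothetical, and the real setup step is replaced by a short sleep. It only illustrates that independent setups can be submitted together and awaited as a group.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def setup_all(satellites, setup_one):
    """Run setup_one(name) for every satellite concurrently.

    Returns a dict mapping each satellite name to its setup result.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(satellites))) as pool:
        futures = {name: pool.submit(setup_one, name) for name in satellites}
        # result() re-raises any exception from the worker,
        # so a failed satellite setup still fails the whole stage.
        return {name: future.result() for name, future in futures.items()}


def fake_setup(name):
    """Stand-in for the real satellite setup (assumption: it blocks until done)."""
    time.sleep(0.1)
    return f"{name} ready"


if __name__ == "__main__":
    start = time.perf_counter()
    results = setup_all(["satellite-1", "satellite-2"], fake_setup)
    elapsed = time.perf_counter() - start
    print(results)
    print(f"elapsed: {elapsed:.2f}s")
```

Threads are enough here because each worker mostly waits on an external process or a remote machine; the total time then approaches that of the slowest satellite rather than the sum of both.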

I must say, it was very enjoyable to optimize this part, especially because it had an immediate and positive impact on the team and their work.

We gained about 25 minutes on each build, which may not seem like much, but it makes a big difference over a day in which multiple builds are executed.
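As a rough back-of-the-envelope check, the sketch below computes the saving from the satellite setup times quoted above (30 minutes before, about 6 after). The number of builds per day is not stated in this post, so the values in the loop are purely hypothetical.

```python
SATELLITE_SETUP_BEFORE_MIN = 30  # from the post
SATELLITE_SETUP_AFTER_MIN = 6    # "about 6 minutes", from the post


def minutes_saved_per_day(before_min, after_min, builds_per_day):
    """Total minutes saved in a day for a given number of builds."""
    return (before_min - after_min) * builds_per_day


if __name__ == "__main__":
    per_build = SATELLITE_SETUP_BEFORE_MIN - SATELLITE_SETUP_AFTER_MIN
    print(f"saved per build: {per_build} min")

    # Hypothetical build counts, for illustration only.
    for builds in (5, 10, 20):
        saved = minutes_saved_per_day(
            SATELLITE_SETUP_BEFORE_MIN, SATELLITE_SETUP_AFTER_MIN, builds
        )
        print(f"{builds} builds/day -> {saved / 60:.1f} hours saved")
```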

This was just the beginning, and it gave new energy to the team. In a future post, I will tell you how this desire to improve the pipeline affected the testing part, so stay tuned.
